Configure Secured Options

This guide shows how to configure secured options to ensure data security in a connection's parameters.

Overview

A connection's parameters fall into two categories: regular options and secured options.

Once set, secured options are never shown to users again. Hence, extra care should be taken when specifying them.

There are two types of secured options: simple fields and multiple key-value fields.

Configure a Secured Option

Configure a secured option by proceeding as follows:

-

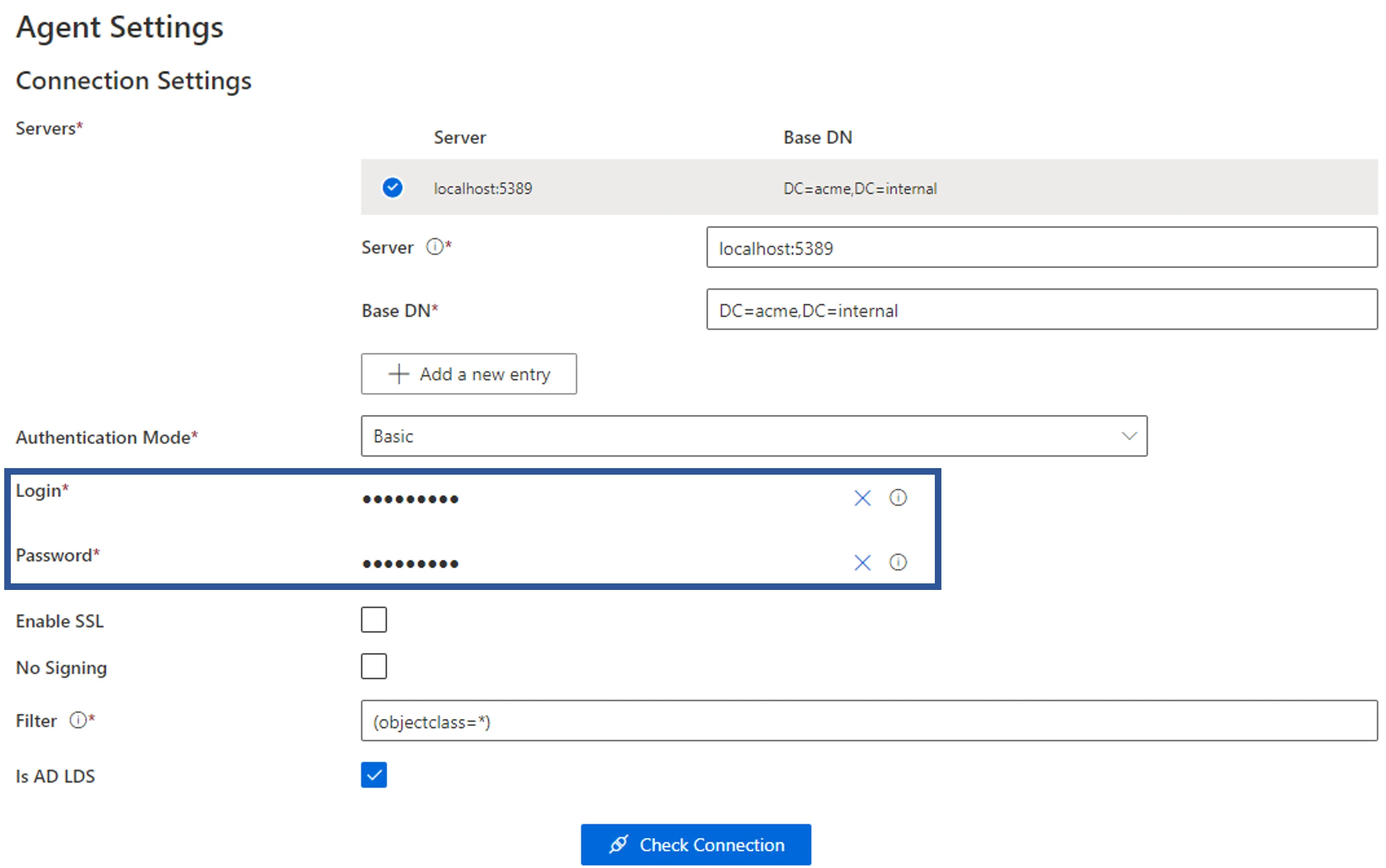

Among a connection's parameters, identify the secured option:

-

for a simple field:

-

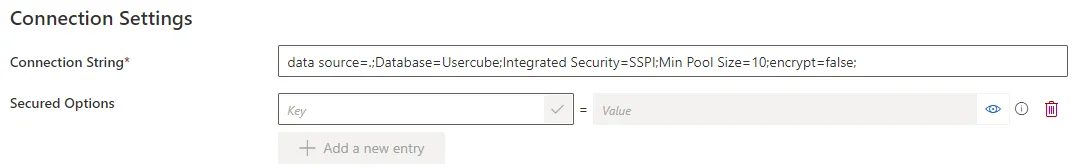

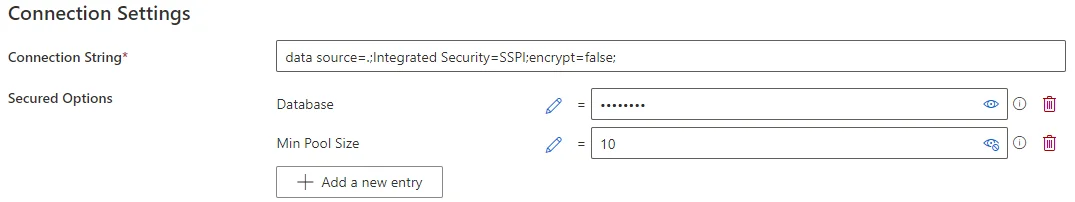

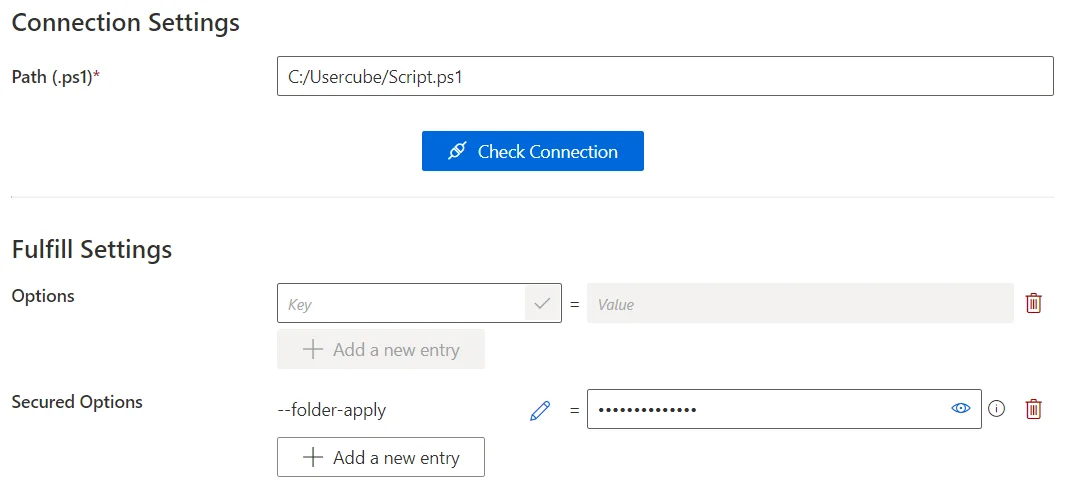

for multiple key-value fields:

Contrary to simple fields, multiple-key-value secured options are not restricted to a given property. They are arbitrary and can be set to anything.

-

-

Fill the field(s) and, if needed, click on the eye icon to make the content visible.

For example, for a simple field in an AD connection, the

LoginandPasswordare by default hidden with ??????:

For example, for multiple key-value fields in an SQL connection, some elements of the connection string might be sensitive and need to be hidden:

In this example, the database name and the minimal pool size are secured options:

Another example of multiple key-value fields in a Powershell connection:

-

Once saved, any secured option's value can no longer be seen. However, it can still be modified by deleting the value and re-specifying it.
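To make multiple key-value secured options more concrete, the Python sketch below splits a connection string into key-value pairs and masks the keys marked as secured, the way the SQL example hides the database name and the minimal pool size. It only illustrates the concept and is not how Usercube stores or displays secured options; the connection string and the helper names are hypothetical, and the parser does not support quoted values that contain `;`.

```python
from typing import Dict, Iterable


def parse_connection_string(conn: str) -> Dict[str, str]:
    """Split 'Key=Value;Key2=Value2' into an ordered dict of options.

    Simplified parser: quoted values containing ';' are not supported.
    """
    pairs: Dict[str, str] = {}
    for segment in conn.split(";"):
        if not segment.strip():
            continue  # tolerate a trailing ';'
        key, sep, value = segment.partition("=")  # split at the first '=' only
        if not sep:
            raise ValueError(f"missing '=' in segment {segment!r}")
        pairs[key.strip()] = value.strip()
    return pairs


def masked_view(pairs: Dict[str, str], secured_keys: Iterable[str], mask: str = "??????") -> str:
    """Rebuild the connection string, hiding the values of the secured keys."""
    secured = {key.lower() for key in secured_keys}  # case-insensitive key match
    return ";".join(
        f"{key}={mask if key.lower() in secured else value}"
        for key, value in pairs.items()
    )


if __name__ == "__main__":
    # Hypothetical SQL Server connection string for the BankingSystem database.
    conn = "Data Source=.;Database=BankingSystem;Integrated Security=true;Min Pool Size=5"
    print(masked_view(parse_connection_string(conn), ["Database", "Min Pool Size"]))
    # Data Source=.;Database=??????;Integrated Security=true;Min Pool Size=??????
```

Only the secured entries are masked; the regular options of the same connection string stay readable.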

Run the Banking Demo Application

This guide shows how to set up and run the Banking demo application.

Banking Application Description

The Banking application is a demo application that represents a web based external system. The Banking application contains:

-

A main page.

-

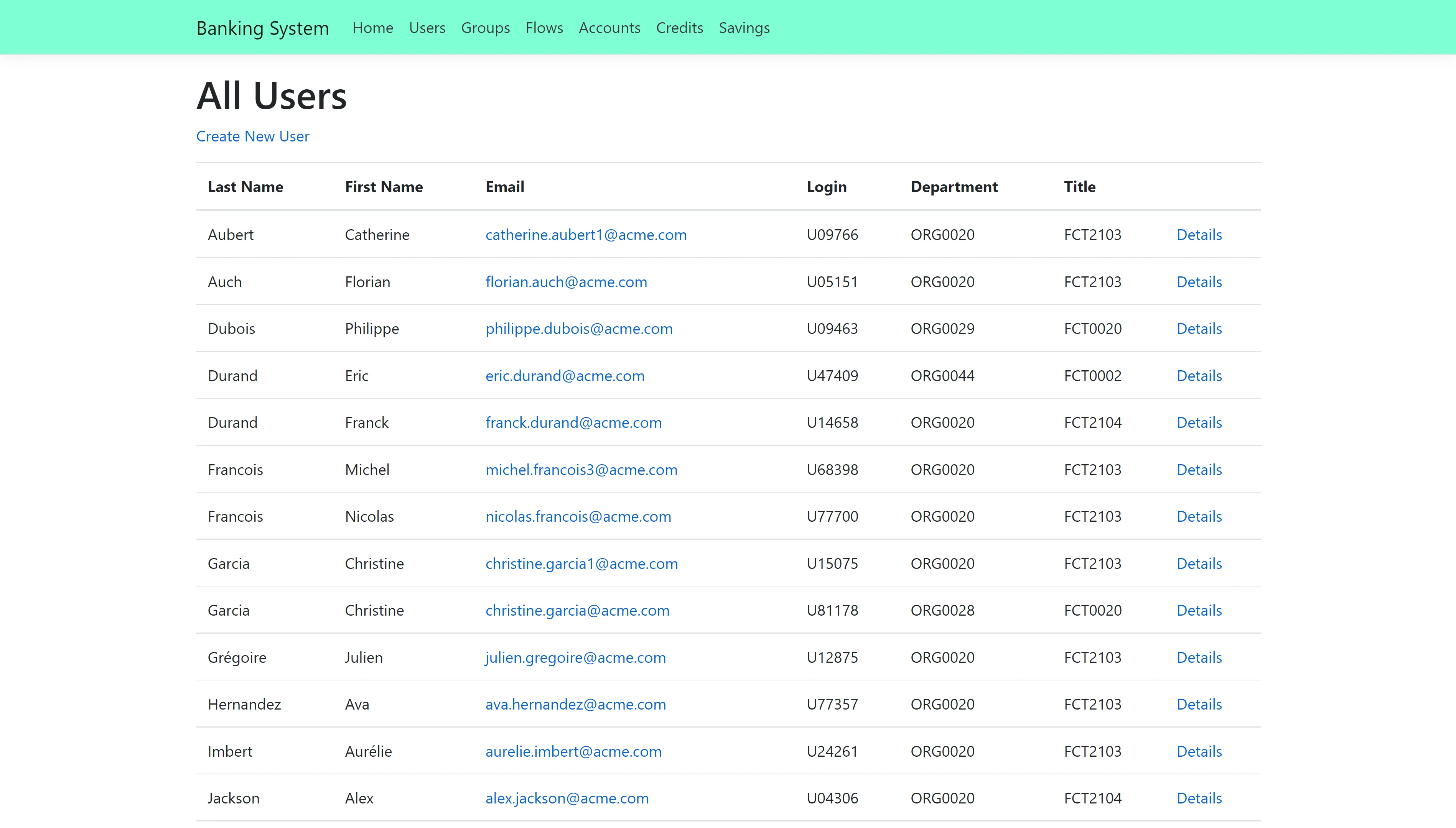

A list of users, accessible by clicking on Users at the top of the page. It is possible to add a user by clicking on Create New User.

-

A list of groups, accessible by clicking on Groups at the top of the page. Clicking on Details on a group shows the users belonging to that group.

-

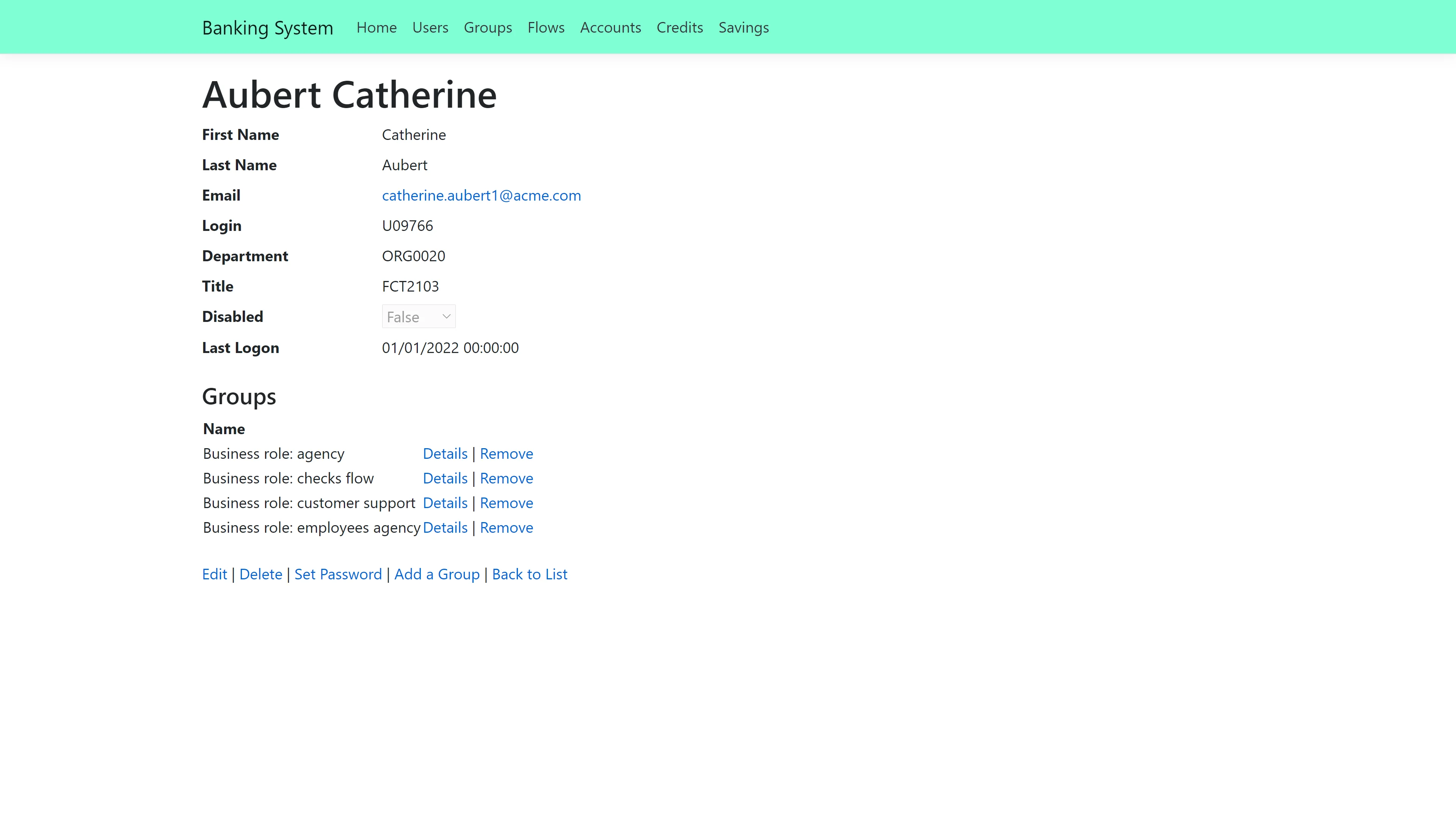

A user's details page for each user, accessible by clicking on Details on a user in the users list.

The most interesting part of the Banking application is a user's page. On a user's page, it is possible to:

- Edit the user's information

- Delete the user

- Add the user to a group

- Remove the user from a group

- Set the user's password

The Banking application uses a database named BankingSystem as a data source. The changes made to a user are applied to the database and saved.

Running the Banking Application

The Banking Application is part of the Usercube SDK, and comes with prefilled sources. To run the Banking application:

- Download the Usercube SDK.

- Download the Usercube runtime.

- Create a database named `BankingSystem`.

- Go to the `Runtime` folder.

- Run `./identitymanager-FillBankingDatabase.exe --connection-string {connection string} --sources-path {sources path} --banking-sql-path {banking sql path}`, replacing `{connection string}` with the `BankingSystem` database connection string, `{sources path}` with the path to `SDK/DemoApps/Sources`, and `{banking sql path}` with the path to `SDK/DemoApps/Banking`.

- Go to the `SDK/DemoApps/Banking` folder.

- Run `./Banking.exe` in a command prompt.

- In a web browser, enter the URL `localhost:5000`.

The Banking application is running, and the web browser is on the Banking home page.
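If the database setup is scripted, passing the arguments as a list avoids quoting problems, since a SQL Server connection string usually contains spaces and semicolons. The Python sketch below assembles the FillBankingDatabase command from the steps above; only the executable name and its three options come from this guide, while the folder locations and the connection string are placeholders to adapt.

```python
import subprocess
from pathlib import Path
from typing import List


def fill_banking_command(runtime_dir: str, sdk_dir: str, connection_string: str) -> List[str]:
    """Build the FillBankingDatabase command line described in this guide."""
    sdk = Path(sdk_dir)
    return [
        str(Path(runtime_dir) / "identitymanager-FillBankingDatabase.exe"),
        "--connection-string", connection_string,  # one list item, so no shell quoting
        "--sources-path", str(sdk / "DemoApps" / "Sources"),
        "--banking-sql-path", str(sdk / "DemoApps" / "Banking"),
    ]


def fill_banking_database(runtime_dir: str, sdk_dir: str, connection_string: str) -> None:
    """Run the tool from the Runtime folder; raises CalledProcessError if it fails."""
    cmd = fill_banking_command(runtime_dir, sdk_dir, connection_string)
    subprocess.run(cmd, cwd=runtime_dir, check=True)


if __name__ == "__main__":
    # Placeholder locations and connection string: adapt them to your installation.
    print(fill_banking_command(
        r"C:\Usercube\Runtime",
        r"C:\Usercube\SDK",
        "Data Source=.;Database=BankingSystem;Integrated Security=true",
    ))
```

Calling `fill_banking_database(...)` with the real paths would then run the tool and stop with an exception if it returns a non-zero exit code.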

To run the Banking application on another port, run `./Banking.exe --urls http://localhost:{port number}`. To access the application, enter the URL `localhost:{port number}` in a web browser.

Some ports are blocked by web browsers, and others may already be in use, so choose the port carefully.
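Before choosing a port, it can help to check that nothing else on the machine is already bound to it. The Python sketch below tries to bind each candidate port on the loopback interface and returns the first one that succeeds. It is a quick heuristic, not a guarantee: it does not know which ports browsers block, and a port that is free now can be taken before the Banking application starts. The 5000 to 5019 range is an arbitrary example.

```python
import socket
from typing import Iterable, Optional


def is_port_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if a TCP socket can be bound to host:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:  # typically "address already in use"
            return False
    return True  # the test socket is closed when the with block exits


def first_free_port(candidates: Iterable[int], host: str = "127.0.0.1") -> Optional[int]:
    """Return the first candidate port that can be bound, or None."""
    for port in candidates:
        if is_port_free(port, host):
            return port
    return None


if __name__ == "__main__":
    port = first_free_port(range(5000, 5020))
    if port is None:
        print("No free port between 5000 and 5019")
    else:
        print(f"./Banking.exe --urls http://localhost:{port}")
```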

Run the HR Demo Application

This guide shows how to set up and run the HR demo application.

HR Application Description

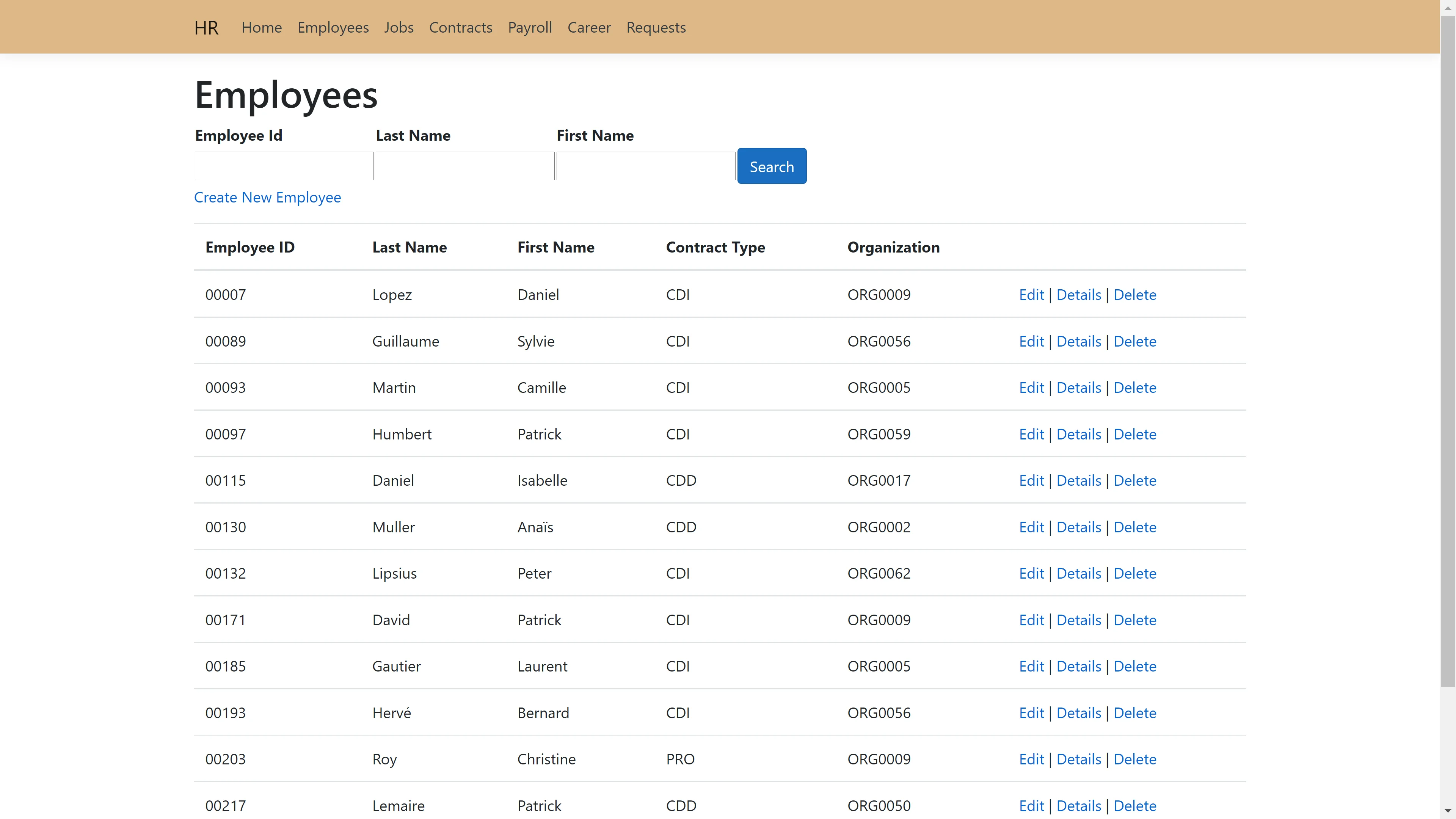

The HR application is a demo application that represents a web-based external system. The HR application contains an employee list.

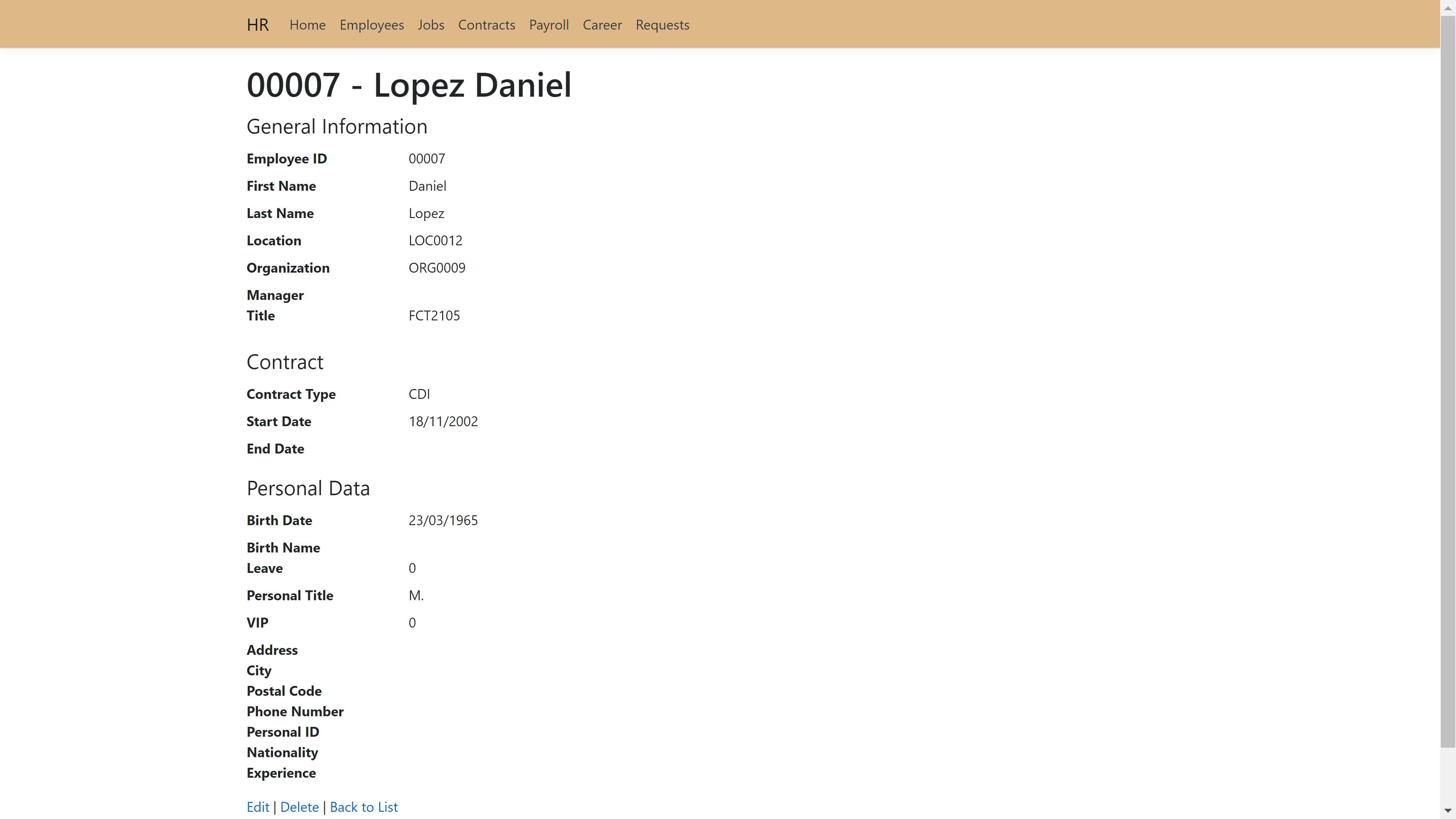

Each employee also has their own page, with the possibility to edit their profile or delete them. It is also possible to add a new employee.

The HR application uses CSV files as data sources. When an employee is added, deleted, or edited, the CSV file is modified and the changes are saved.

Running the HR Application

The HR Application is part of the Usercube SDK, and comes with prefilled sources. To run the HR application:

- Download the Usercube SDK.

- Go to `SDK/DemoApps/HR`.

- In `appsettings.json`, set `CSVPath` to `"..\\Sources"`.

- Run `./HR.exe` in a command prompt.

- In a web browser, enter the URL `localhost:5000`.

The HR application is running, and the web browser is on the HR application employee list.
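The `CSVPath` step can also be scripted. The Python sketch below assumes that `CSVPath` is a top-level key of `appsettings.json` and that the file contains plain JSON without comments (Python's `json` module rejects comments, which .NET configuration files sometimes contain). Note that the Python string `"..\\Sources"` holds a single backslash, and `json.dumps` escapes it back to `"..\\Sources"` in the file, which is the value this guide asks for. The function name is hypothetical.

```python
import json
from pathlib import Path


def set_csv_path(settings_file: str, csv_path: str = "..\\Sources") -> dict:
    """Set the top-level CSVPath key of an appsettings.json file and save it."""
    path = Path(settings_file)
    # utf-8-sig also accepts the byte order mark that Windows editors often add.
    settings = json.loads(path.read_text(encoding="utf-8-sig"))
    settings["CSVPath"] = csv_path
    path.write_text(json.dumps(settings, indent=2), encoding="utf-8")
    return settings
```

For example, calling `set_csv_path("appsettings.json")` from the `SDK/DemoApps/HR` folder applies the change while keeping the file's other settings.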

To run the HR application on another port, run `./HR.exe --urls http://localhost:{port number}`. To access the application, enter the URL `localhost:{port number}` in a web browser.

Some ports are blocked by web browsers, and others may already be in use, so choose the port carefully.

How-Tos

These guides will help you set up connectors with practical step-by-step procedures.

- Run the Banking Demo Application: Set up the Banking demo application in order to test a connector with a web-based external system.

- Run the HR Demo Application: Set up the HR demo application in order to test a connector with a web-based external system.

- Create a Connector: How to implement a connector via XML to connect Usercube to an external system.

- Register for Microsoft Entra ID: Grant Usercube a service account with Microsoft Identity Platform with the right permissions to authenticate to Microsoft Entra ID.

- Configure Secured Options: Configure secured options to ensure data security in a connection's parameters.

- Set Up Incremental Synchronization: How to implement an incremental synchronization job for a given connector via XML, to upload the related system's resources to Usercube.

- Write a Template for a Ticket Connector: Write a template that will be used by a Ticket connector to complete the title and the description of the ticket.

- Write a PowerShell Script for Synchronization: Write a PowerShell script used by a PowerShellSync connector.

- Write a PowerShell Script for Provisioning: Write a PowerShell script used by a PowerShellProv connector.

- Fulfill Microsoft Exchange via PowerShell: Set up a PowerShell connector to fulfill data in a PowerShell-compliant system, here Microsoft Exchange Server.

- Write a Robot Framework Script: Write a Robot Framework script that will be used by the Robot Framework connector.

- Interact with a Web Page via Robot Framework: Write a Robot Framework script that interacts with a web-based external system.

- Interact with a GUI Application via Robot Framework: Write a Robot Framework script that interacts with an external application.

- Export CyberArk Data via SCIM: Set up a SCIM connector to extract data from your CyberArk instance into CSV source files that will in turn be fed to the synchronization task and to Usercube's resource repository.

- Provision Salesforce Users' Profiles via SCIM: Provision a user's account profile in a Salesforce system with the SCIM connector.

- Set up SharePoint's Export and Synchronization: Set up a SharePoint connector to extract data from your SharePoint instance into CSV source files that will be fed to the synchronization task and to Usercube's resource repository.

Interact with a GUI Application via Robot Framework

This guide shows how to write a Robot Framework script which interacts with an external application.

Example: Interacting with an application via a GUI

Consider an external system that is accessible through a GUI program and that does not offer an API. In this situation, we can interact with the external system either manually or through a Robot Framework connection.

Prerequisites

This guide will focus only on how to interact with a GUI application. The guide on how to write a Robot Framework script explains the basics of Robot Framework. The basic prerequisites can be found on the Robot Framework connector page.

The requirements specific to the Robot Framework FlaUI library are as follows:

- Python 3.7 or 3.8. For Python 3.9, running `pip install wheel` in the command prompt may solve installation errors.

- Robot Framework FlaUI library: run `pip install --upgrade robotframework-flaui` in the command prompt.

- The application with the GUI.

Other Robot Framework libraries can interact with applications. The desktop part of the Zoomba library can also interact with a program, but requires an Appium server.

While not strictly required, it is highly recommended that the Robot Framework FlaUI library documentation be consulted.

Inspecting tools

Most FlaUI keywords require an XPath locator. These XPaths can be found using the FlaUI inspection tool. Download the FlaUI inspection tool zip archive, then extract the files to a folder. The inspection tool can be launched simply by running `FlaUInspect.exe`.

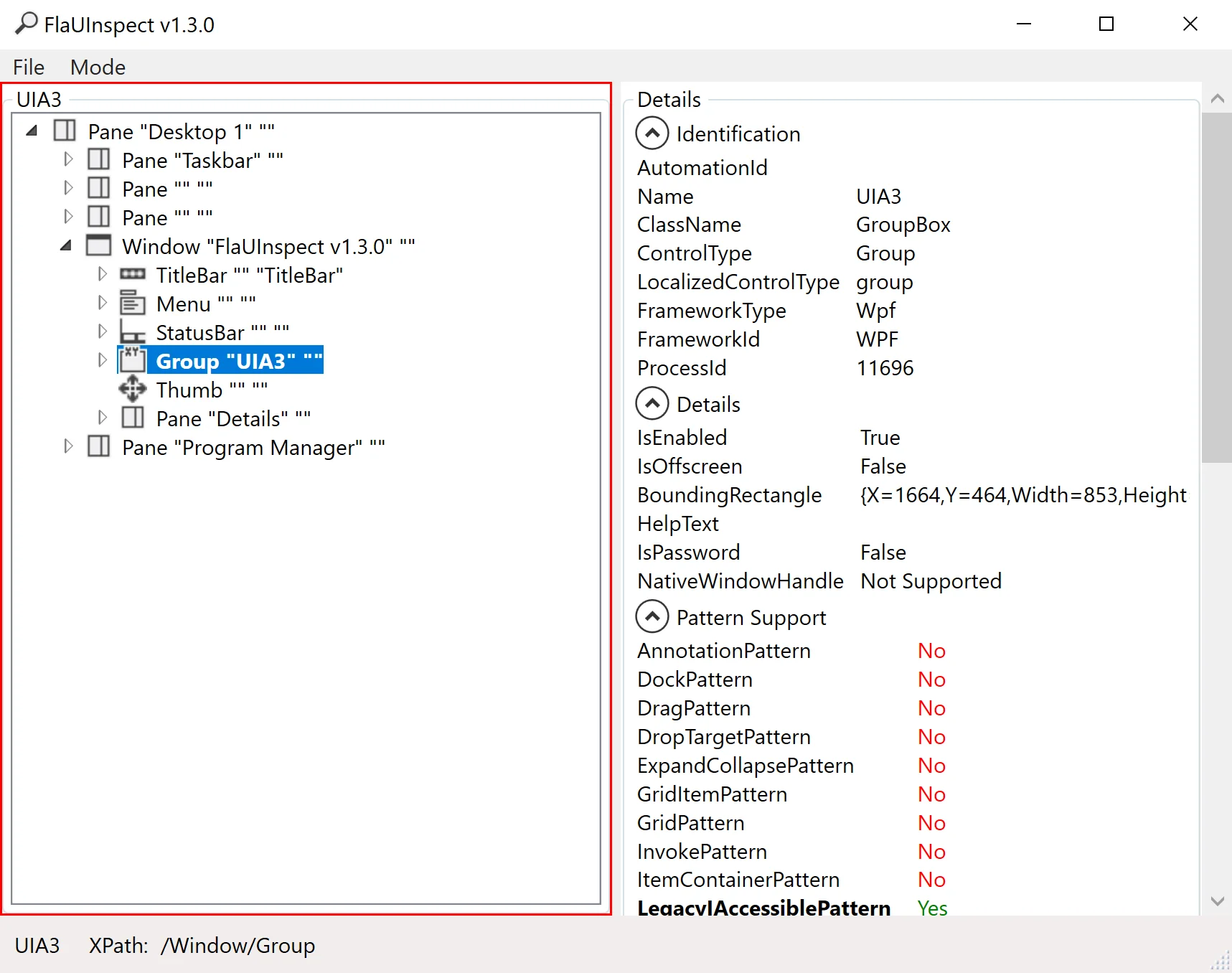

This tool lets you choose the UIA (UI Automation) version. Picking UIA3 should work in most use cases.

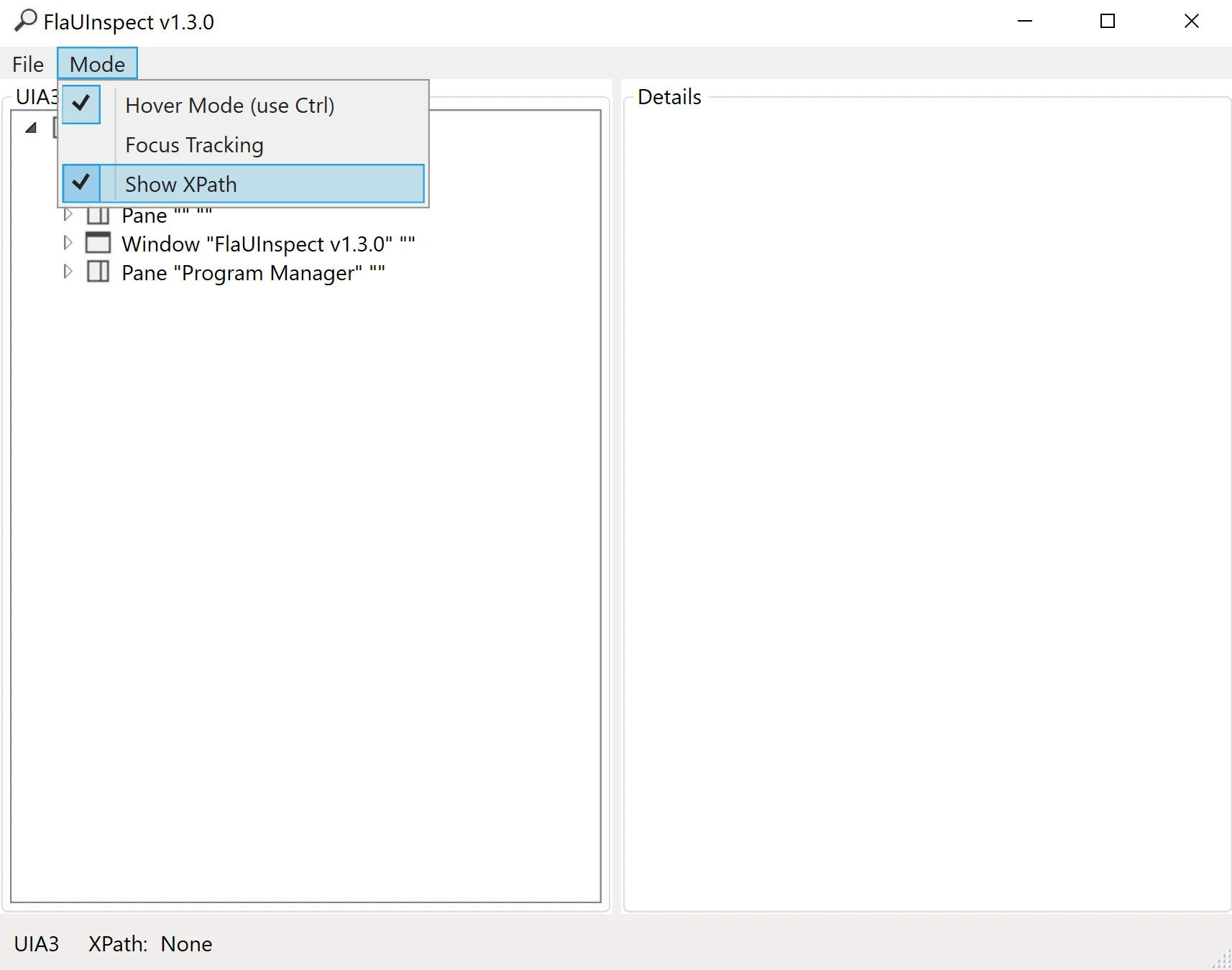

The FlaUI inspection tool shows each window that is open on the computer. To find the element the script should interact with, it is possible to search manually through the windows and their elements. However, the easiest way is to use Hover Mode, which is accessible in the toolbar by clicking Mode > Hover Mode (use Ctrl). To display XPaths, click Mode > Show XPath.

To see the XPath of an element, hover over the element and press Ctrl. A red box should appear around the element, and the FlaUI inspection tool should show the element's information. The XPath should appear at the bottom left of the FlaUI inspection tool window.

As an example, imagine an application showing a list of files and folders. Targeting a specific file would produce an XPath in the shape of `/Window/Pane[3]/Pane/Pane[2]/List/Group[1]/ListItem[1]`. The important parts of this path are the beginning and the end. The beginning of the XPath specifies the window. The middle part of the XPath is, in most cases, irrelevant.

The last part of the XPath, however, `/Group[1]/ListItem[1]`, is what should be modified to find the right file. `Group[1]` means the element is in the first file group. `ListItem[1]` means the element is the first file of the group. Depending on the File Explorer view mode, the XPath may end with `Edit[1]`, which means the targeted element is the name section of the file.

As the window's position may change, the window should be specified by name. For the Downloads folder, `Window[@Name='Downloads']` specifies the window. The file may not always be at the same position either, so it should also be specified by name. If the file is `FlaUInspect.exe`, it can be specified with `ListItem[@Name='FlaUInspect.exe']`. The group may also change. As it is not easy to find the right group, the best method is to remove the groups by right-clicking in the folder, then selecting Group by > (None).
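When names come from provisioning data, a name containing an apostrophe (for example, a file named after an employee called O'Brien) breaks a predicate written as `[@Name='...']`. XPath 1.0 has no escape character, so the usual workaround is to switch quote types or to use `concat()`. The Python sketch below builds such name-based XPaths, assuming FlaUI's XPath engine follows XPath 1.0 quoting rules. The helper names are hypothetical, and the middle segment `Pane[3]/Pane/Pane[2]/List` is copied from this guide's example, so it depends on the File Explorer layout.

```python
def xpath_literal(value: str) -> str:
    """Return value as an XPath 1.0 string literal."""
    if "'" not in value:
        return f"'{value}'"
    if '"' not in value:
        return f'"{value}"'
    # Both quote types present: XPath 1.0 has no escapes, so glue pieces with concat().
    pieces = value.split("'")
    return "concat(" + ", \"'\", ".join(f"'{p}'" for p in pieces) + ")"


def by_name(control_type: str, name: str) -> str:
    """Build a step such as ListItem[@Name='report.txt']."""
    return f"{control_type}[@Name={xpath_literal(name)}]"


def file_item_xpath(window_name: str, file_name: str) -> str:
    """XPath of a file in an ungrouped File Explorer list view (layout-dependent)."""
    steps = [by_name("Window", window_name), "Pane[3]", "Pane", "Pane[2]", "List",
             by_name("ListItem", file_name)]
    return "/" + "/".join(steps)
```

For instance, `file_item_xpath("Downloads", "FlaUInspect.exe")` returns `/Window[@Name='Downloads']/Pane[3]/Pane/Pane[2]/List/ListItem[@Name='FlaUInspect.exe']`, while a file named `O'Brien.txt` gets the double-quoted predicate `[@Name="O'Brien.txt"]`.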

Use Case: Set a file to read-only

Consider an HR system that creates a file for each employee. When an employee retires, it may be interesting to set the file to read-only, so that it is not modified by accident. It is possible to set the file to read-only by provisioning it with the Robot Framework.

Define settings

As with every other Robot Framework script, the Usercube Robot Framework resource needs to be imported to launch the provisioning. The FlaUI library also needs to be imported to use its keywords.

```robotframework
*** Settings ***
Resource    C:/identitymanagerDemo/Runtime/identitymanagerRobotFramework.resource
Library     FlaUILibrary
```

Define variables

The Variables section contains variables that are used in the rest of the script. As the section is at the start of the script, the variables are easy to update. In this case, the folder's name and path are important variables that may be changed.

```robotframework
*** Variables ***
${FOLDERNAME}    RobotFrameworkIdentity
${FOLDERPATH}    C:/identitymanagerDemo/${FOLDERNAME}
```

Define custom keywords

To modify a file's properties, the script needs custom keywords that perform the required actions, in this case navigating through the File Explorer program. These keywords were written with the Windows 10 File Explorer in mind.

| Keyword | Details |
|---|---|
| Open Explorer | Opens File Explorer and attaches it to FlaUI. A program can be attached to FlaUI by its name or by its PID (process identifier). The Launch Application keyword returns a PID, but the program may launch multiple processes. File Explorer, in particular, is almost always running, even when no Explorer window is open, so the returned PID may not be the right one. Attaching by the program name seems to work in this case. |
| Open Folder | Opens the folder specified in the Variables section. Accessing the address bar is not trivial, as it is not a text field until it is clicked, and clicking most elements of the address bar does not open the text field. This keyword clicks the icon in the address bar, which does open the text field. |
| Get File Name | Returns the file's name. Computing the file's name in a keyword instead of an inline expression keeps the syntax simpler. |
| Set File To Read Only | Sets the file corresponding to the user to read-only. This keyword calls the other keywords in the right order, which keeps the script readable. |
| Open File Properties | Right-clicks a file, then opens the file's properties. The right click targets the file's image, but it could target any of the file's fields. Note that changing the folder's view mode or ordering may alter the file's XPath. |
| Select Read Only | Selects the read-only option by clicking the Read-only checkbox, then the OK button. If the checkbox is already ticked, clicking it removes the read-only mode. The script clicks OK rather than Apply because OK also closes the properties window. |
| Close Explorer | Clicks the cross to close the Explorer window. The Close Application keyword could also close the program, but it closes the background Explorer process as well, so closing only the window is better. |

```robotframework
*** Keywords ***
Open Explorer
    Launch Application    explorer
    Attach Application By Name    explorer
    Open Folder

Open Folder
    Click    /Window[@Name='File Explorer']/Pane[2]/Pane[3]/ProgressBar/Pane/ToolBar/SplitButton
    Set Text To Textbox    /Window[@Name='File Explorer']/Pane[2]/Pane[3]/ProgressBar/ComboBox/Edit[@Name='Address']    ${FOLDERPATH}
    Press Key    s'ENTER'

Get File Name
    [Arguments]    ${order}
    [Return]    ${order['Changes']['Identifier']}.txt

Set File To Read Only
    [Arguments]    ${order}
    ${FileName}=    Get File Name    ${order}
    Open File Properties    ${FileName}
    Select Read Only    ${FileName}

Open File Properties
    [Arguments]    ${filename}
    Right Click    /Window[@Name='${FOLDERNAME}']/Pane[3]/Pane/Pane[2]/List/ListItem[@Name='${filename}']/Image
    Click    /Menu[@Name='Context']/MenuItem[@Name='Properties']

Select Read Only
    [Arguments]    ${filename}
    Click    /Window[@Name='${filename} Properties']/CheckBox[@Name='Read-only']
    Click    /Window[@Name='${filename} Properties']/Button[@Name='OK']

Close Explorer
    Click    /Window[@Name='${FOLDERNAME}']/TitleBar/Button[@Name='Close']
```
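Keywords that only compute values, such as Get File Name, can also be written in Python: Robot Framework can import a Python module as a library and exposes each of its public functions as a keyword. The sketch below is a hypothetical `OrderKeywords.py` module (not part of Usercube) whose `get_order_file_name` function would be callable as Get Order File Name after adding `Library    OrderKeywords.py` to the Settings section. Like the guide, it assumes that the order exposes Changes > Identifier, and it fails with a clearer message when it does not.

```python
"""OrderKeywords.py: hypothetical Robot Framework keyword library.

Import it in the Settings section with:    Library    OrderKeywords.py
Robot Framework turns each public function into a keyword, so
get_order_file_name can be called as "Get Order File Name".
"""
from typing import Any, Mapping


def get_order_file_name(order: Mapping[str, Any], extension: str = ".txt") -> str:
    """Return '<Changes.Identifier><extension>', like the Get File Name keyword."""
    try:
        identifier = order["Changes"]["Identifier"]
    except (KeyError, TypeError) as exc:
        # Fail with a readable message instead of a bare KeyError.
        raise ValueError("the provisioning order has no Changes > Identifier value") from exc
    return f"{identifier}{extension}"
```

In the script, the call would then read `${FileName}=    Get Order File Name    ${order}`.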

Define mandatory keywords

To provision the system, the script must contain the three mandatory keywords: ExecuteAdd, ExecuteDelete, and ExecuteModify. In this case, only ExecuteDelete is implemented. (It is considered, perhaps foolishly, that employees will not come out of retirement!)

```robotframework
*** Keywords ***
ExecuteAdd
    [Arguments]    ${order}
    Log To Console    ExecuteAdd is not implemented

ExecuteDelete
    [Arguments]    ${order}
    Set File To Read Only    ${order}

ExecuteModify
    [Arguments]    ${order}
    Log To Console    ExecuteModify is not implemented
```

Define test cases

Although the Robot Framework is used for provisioning in Usercube, it is most often used for testing. This is why the script's entry point is a test case: the Test Cases section defines what should happen when Usercube starts the Robot Framework task. The Launch Provisioning keyword is the one that fetches the provisioning orders.

```robotframework
*** Test Cases ***
Run Provisioning
    Open Explorer
    Launch Provisioning
    Close Explorer
```

Read the full script

The full script is as follows:

```robotframework
*** Settings ***
Resource    C:/identitymanagerDemo/Runtime/identitymanagerRobotFramework.resource
Library     FlaUILibrary

*** Variables ***
${FOLDERNAME}    RobotFrameworkIdentity
${FOLDERPATH}    C:/identitymanagerDemo/${FOLDERNAME}

*** Keywords ***
ExecuteAdd
    [Arguments]    ${order}
    Log To Console    ExecuteAdd is not implemented

ExecuteDelete
    [Arguments]    ${order}
    Set File To Read Only    ${order}

ExecuteModify
    [Arguments]    ${order}
    Log To Console    ExecuteModify is not implemented

Open Explorer
    Launch Application    explorer
    Attach Application By Name    explorer
    Open Folder

Open Folder
    Click    /Window[@Name='File Explorer']/Pane[2]/Pane[3]/ProgressBar/Pane/ToolBar/SplitButton
    Set Text To Textbox    /Window[@Name='File Explorer']/Pane[2]/Pane[3]/ProgressBar/ComboBox/Edit[@Name='Address']    ${FOLDERPATH}
    Press Key    s'ENTER'

Get File Name
    [Arguments]    ${order}
    [Return]    ${order['Changes']['Identifier']}.txt

Set File To Read Only
    [Arguments]    ${order}
    ${FileName}=    Get File Name    ${order}
    Open File Properties    ${FileName}
    Select Read Only    ${FileName}

Open File Properties
    [Arguments]    ${filename}
    Right Click    /Window[@Name='${FOLDERNAME}']/Pane[3]/Pane/Pane[2]/List/ListItem[@Name='${filename}']/Image
    Click    /Menu[@Name='Context']/MenuItem[@Name='Properties']

Select Read Only
    [Arguments]    ${filename}
    Click    /Window[@Name='${filename} Properties']/CheckBox[@Name='Read-only']
    Click    /Window[@Name='${filename} Properties']/Button[@Name='OK']

Close Explorer
    Click    /Window[@Name='${FOLDERNAME}']/TitleBar/Button[@Name='Close']

*** Test Cases ***
Run Provisioning
    Open Explorer
    Launch Provisioning
    Close Explorer
```

Interact with a Web Page via Robot Framework

This guide explains how to write a Robot Framework script that interacts with a web based external system.

Example: Interacting with a web-based application

Consider an external system that is accessible through a web interface and that does not offer an API. In this situation, we can interact with the external system either manually or through a Robot Framework connection.

Prerequisites

This guide will focus only on how to interact with a web-based application. The guide on how to write a Robot Framework script explains the basics of Robot Framework. The basic prerequisites can be found on the Robot Framework connector page.

The prerequisites are explained in detail on the Robot Framework SeleniumLibrary PyPI page.

The requirements specific to the Robot Framework Selenium library are as follows:

- Robot Framework SeleniumLibrary: run `pip install --upgrade robotframework-seleniumlibrary` in the command prompt.

- A web browser.

- A web driver that corresponds to the web browser and its version. Web drivers can be found on the Selenium website.

This web driver should be in your PATH. To check that it is, run `gcm {webdriver_name}` in PowerShell (`gcm` is the alias of `Get-Command`). For example, for Edge, run `gcm MicrosoftWebDriver`.

The web driver for Edge is called `msedgedriver.exe`, but the Robot Framework may expect it to be called `MicrosoftWebDriver.exe`, depending on the Python version. Renaming the web driver from `msedgedriver.exe` to `MicrosoftWebDriver.exe` should fix this issue.

If the browser is updated, the web driver should also be updated.
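The same check can be done from Python, the interpreter Robot Framework runs on. The sketch below uses `shutil.which`, which searches the PATH much like `gcm` does; the two Edge driver names are the ones mentioned above, and the list is an assumption to adapt for other browsers.

```python
import shutil
from typing import Iterable, Optional

# Names the Edge driver may have, per this guide: the original and the renamed one.
EDGE_DRIVER_NAMES = ("MicrosoftWebDriver", "msedgedriver")


def find_webdriver(names: Iterable[str] = EDGE_DRIVER_NAMES,
                   path: Optional[str] = None) -> Optional[str]:
    """Return the full path of the first driver found on PATH (or in `path`), else None."""
    for name in names:
        location = shutil.which(name, path=path)  # on Windows, PATHEXT adds .exe
        if location:
            return location
    return None


if __name__ == "__main__":
    print(find_webdriver() or "No Edge web driver found on PATH")
```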

While not strictly required, it is highly recommended to look at the Robot Framework SeleniumLibrary documentation.

Selenium basics

Selenium is a web browser automation tool. Selenium can automatically perform scripted actions in a web browser. Selenium is not easy to use on its own, and it is easier to use Selenium via the Robot Framework. However, the basics are still the same.

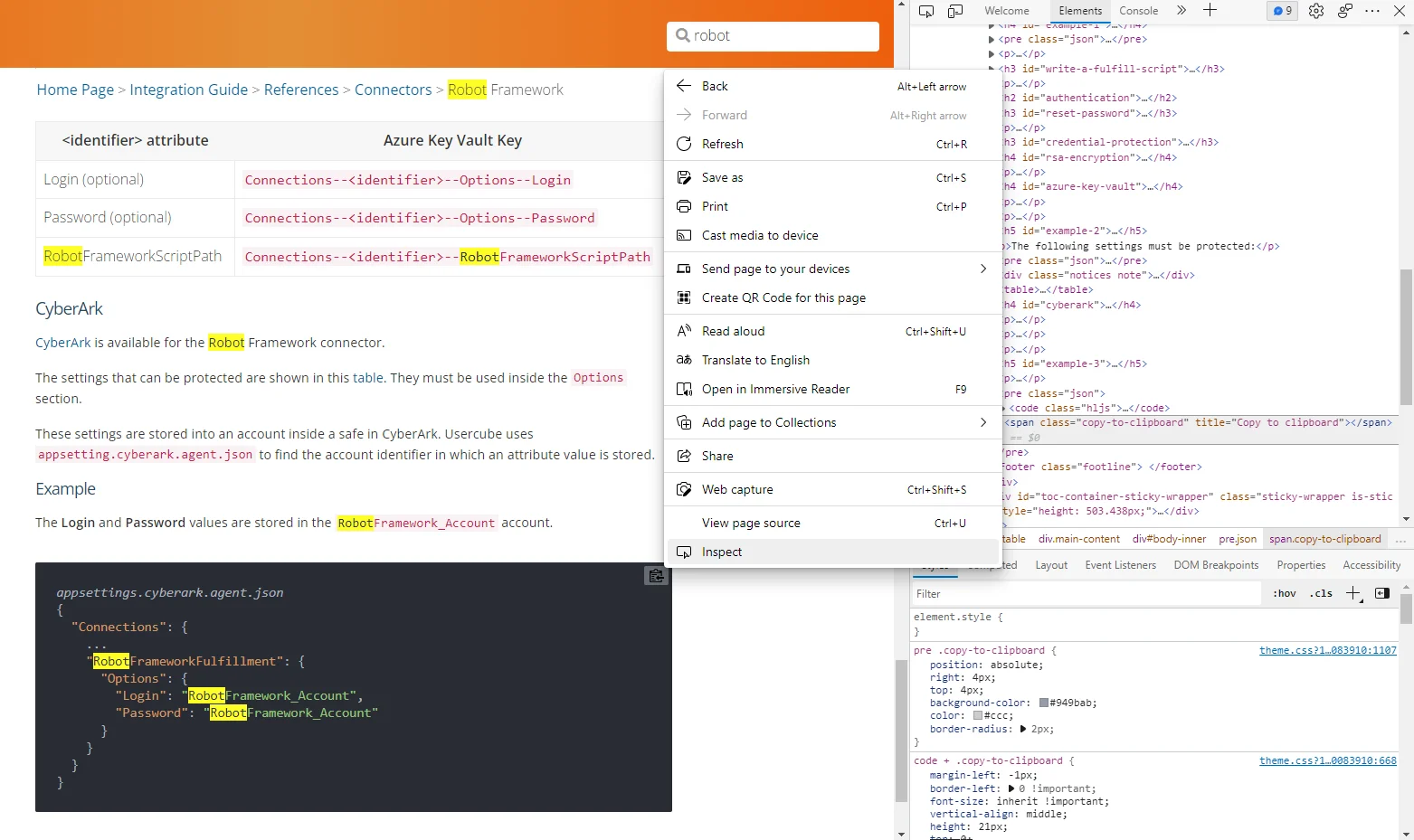

The basic structure of a web page is defined with HTML. It is accessible with the inspect tool, which can be opened by pressing the F12 key on most browsers. For Selenium, we want to find information on specific parts of the page. Inspecting an element can be done by right clicking the element, and clicking Inspect.

Suppose the goal of the script is to copy the content of the code block and paste it into a file, to ensure that the file is up to date with the documentation. To do this, the Robot Framework has to click the copy to clipboard button with the keyword Click Element.

Locating elements

As stated in the Robot Framework SeleniumLibrary documentation, the keyword Click Element requires an element locator. The element locator identifies which element the Robot Framework should click. To ensure the right element is clicked, the element locator should match only that element.

In the HTML, the button has the attribute `class="copy-to-clipboard"`. The element locator `class:copy-to-clipboard` matches the button. However, other buttons on the page have the same class. The easiest way to click the right button is with an XPath element locator.
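A quick way to see whether a locator is ambiguous is to count how many elements it matches. The Python sketch below does this for a `class:` locator on a saved copy of a page, using the standard `html.parser` module; the page fragment is hypothetical. On the live page, the SeleniumLibrary keyword Get Element Count returns the same kind of information.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class ClassLocatorCounter(HTMLParser):
    """Count the elements whose class attribute contains a given class name."""

    def __init__(self, class_name: str) -> None:
        super().__init__()
        self.class_name = class_name
        self.matches = 0

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        classes = (dict(attrs).get("class") or "").split()  # class may hold several names
        if self.class_name in classes:
            self.matches += 1


def count_class_matches(html: str, class_name: str) -> int:
    counter = ClassLocatorCounter(class_name)
    counter.feed(html)
    counter.close()
    return counter.matches


if __name__ == "__main__":
    # Hypothetical fragment: two code blocks, each with its own copy button.
    page = """
    <pre>first block<span class="copy-to-clipboard">Copy</span></pre>
    <pre>second block<span class="copy-to-clipboard">Copy</span></pre>
    """
    print(count_class_matches(page, "copy-to-clipboard"))  # 2, so the locator is ambiguous
```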

XPath element locators

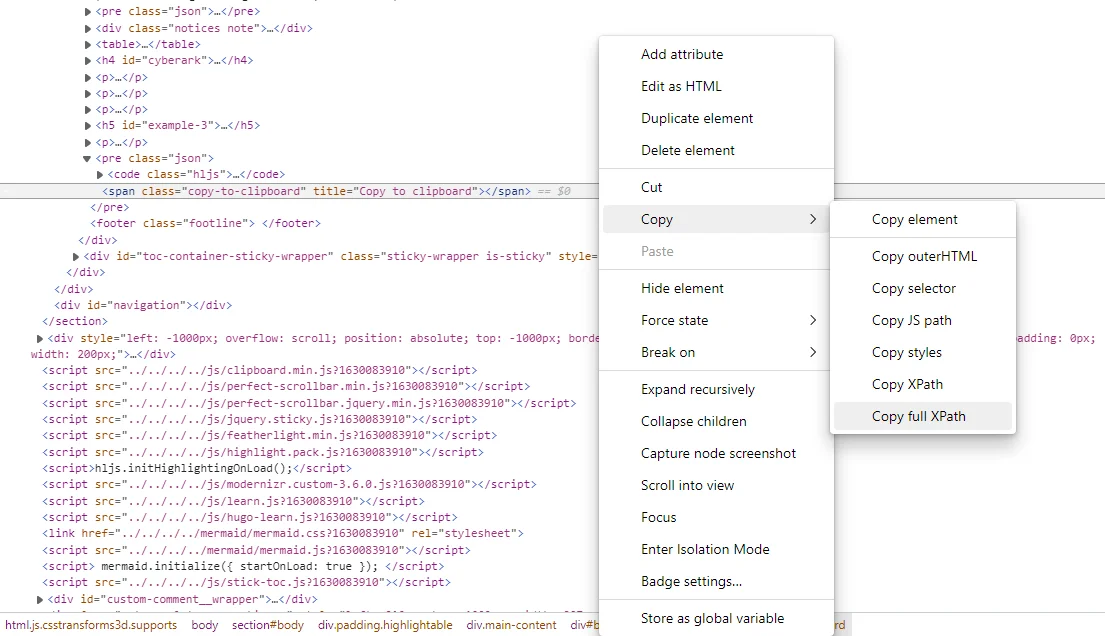

Each element on the web page has a full XPath that uniquely identifies it, which means that an XPath locator can always be used. To get the full XPath of an element, inspect the element, then right-click it in the HTML, and click Copy > Full XPath.

For the copy to clipboard button, the XPath is `/html/body/section/div[2]/div[3]/div[1]/pre[4]/span`.

Full XPaths change whenever the page structure is updated. When one is available, a more stable location strategy, such as `id` or `name`, should reduce the maintenance needs of the script.
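This fragility can be reproduced with Python's `xml.etree.ElementTree`, which supports a small subset of XPath, including positional and attribute predicates. In the sketch below, on a hypothetical and well-formed page fragment (real HTML pages are usually not valid XML), inserting one element at the top of the body makes the positional path point at a different button, while the `id`-based path still finds the original one.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Tuple

# Hypothetical page fragment, written as valid XML so that ElementTree can parse it.
PAGE = """<html><body>
  <div><span class="copy-to-clipboard">first</span></div>
  <div><span class="copy-to-clipboard" id="target">second</span></div>
</body></html>"""

POSITIONAL = "./body/div[2]/span"   # behaves like a copied full XPath
BY_ID = ".//span[@id='target']"     # attribute-based locator


def text_at(root: ET.Element, path: str) -> Optional[str]:
    element = root.find(path)
    return None if element is None else element.text


def locate_before_and_after() -> Tuple[Tuple[Optional[str], Optional[str]], Tuple[Optional[str], Optional[str]]]:
    root = ET.fromstring(PAGE)
    before = (text_at(root, POSITIONAL), text_at(root, BY_ID))
    # Simulate a page update: a new div is inserted at the top of the body.
    root.find("./body").insert(0, ET.Element("div"))
    after = (text_at(root, POSITIONAL), text_at(root, BY_ID))
    return before, after


if __name__ == "__main__":
    print(locate_before_and_after())
    # (('second', 'second'), ('first', 'second'))
```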

Hypertext references and API calls

Some elements have links to other websites or to other pages of the same website. In the HTML inspection, these elements are likely to have an `href` attribute containing the link. Href stands for hypertext reference. By going directly to the linked URL instead of clicking the link, the script does not need to specify an element locator for the link.

In some cases, an API can be called simply by going to the right URL. This URL may be used as a shortcut to avoid having to fill in text fields. The `href` attributes may show the format of the API calls.
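For instance, the Banking script in this guide removes a user from a group by going to `{BANKINGURL}/User/RemoveGroup/{login}?groupId={group name}`. When such URLs are built from provisioning data, values containing spaces or special characters need URL encoding. The Python sketch below shows one way to do this with `urllib.parse`; the function name and sample values are hypothetical, and it assumes the application decodes `+` as a space in the query string, as standard form encoding does.

```python
from urllib.parse import quote, urlencode


def remove_group_url(base_url: str, login: str, group_name: str) -> str:
    """Build {base}/User/RemoveGroup/{login}?groupId={group}, escaping both values."""
    path_login = quote(login, safe="")           # keeps '/' or spaces inside one path segment
    query = urlencode({"groupId": group_name})   # spaces become '+', ':' becomes %3A
    return f"{base_url.rstrip('/')}/User/RemoveGroup/{path_login}?{query}"


if __name__ == "__main__":
    print(remove_group_url("http://localhost:5011", "U1001", "Business role: agency"))
    # http://localhost:5011/User/RemoveGroup/U1001?groupId=Business+role%3A+agency
```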

Use Case: Fulfill groups in a Banking system

The Banking system is a Usercube demo application that represents an external system. The Banking system stores basic information on its users, such as their names and mail addresses. The most interesting part of the Banking system is the groups functionality, as users can belong to multiple groups, and groups can have multiple users.

The goal of this use case is to extract the existing associations between groups and users from the Banking system into Usercube, then provide a way to add users to a group and remove users from a group. To showcase the password generation, the script will generate a password for the provisioned users' accounts.

Connector configuration

As stated in the previous part, the Banking connector is supposed to link the users and their groups. This means that the connector has a user entity type, and a group entity type, with an entity association between them.

The Banking connector has to be able to extract the data, and fulfill the Banking system. The fulfillment of the Banking system can only be done through its web application, which means the Robot Framework Selenium library will be used. The extraction of the data will be performed through an SQL connection.

For simplicity's sake, only the user's Login is kept.

```xml
<Connector Identifier="Banking" DisplayName_L1="Banking" Agent="Local"/>
<Category Policy="Default" Identifier="Banking" DisplayName_L1="Banking" />
<Connection Connector="Banking" DisplayName_L1="Banking Extract Database" Identifier="BankingExtractDatabase" Package="Usercube.SQL.SQLServer@0000001"/>
<Connection Connector="Banking" DisplayName_L1="Banking Fulfillment" Identifier="BankingFulfillment" Package="Usercube.RobotFramework@0000001"/>

<EntityType Identifier="Banking_Group" DisplayName_L1="Banking - Group" DisplayName_L2="Banking - Groupe">
  <Property Identifier="name" DisplayName_L1="Group Name" IsKey="true" TargetColumnIndex="1" Type="String" />
  <Property Identifier="users" DisplayName_L1="Users" Type="ForeignKey" />
</EntityType>
<EntityType Identifier="Banking_User" DisplayName_L1="Banking - User" DisplayName_L2="Banking - Utilisateur">
  <Property Identifier="login" DisplayName_L1="Login" IsKey="true" TargetColumnIndex="1" Type="String" />
  <Property Identifier="groups" DisplayName_L1="Groups" Type="ForeignKey" />
</EntityType>

<EntityTypeMapping Identifier="Banking_Group" Connector="Banking" ConnectionTable="BankingExtractDatabase_&quot;dbo&quot;.&quot;um_groups&quot;">
  <Property Identifier="name" ConnectionColumn="name" IsPrimaryKey="true" />
</EntityTypeMapping>
<EntityTypeMapping Identifier="Banking_User" Connector="Banking" ConnectionTable="BankingExtractDatabase_&quot;dbo&quot;.&quot;um_users&quot;">
  <Property Identifier="login" ConnectionColumn="login" IsPrimaryKey="true" />
</EntityTypeMapping>

<EntityAssociation Identifier="Banking_Users_Groups" DisplayName_L1="User" IsProperty1Collection="true" IsProperty2Collection="true" Property1="Banking_Group:users" Property2="Banking_User:groups" />
<EntityAssociationMapping Identifier="Banking_Users_Groups" Column1="group" Column2="user" ConnectionTable="BankingExtractDatabase_&quot;dbo&quot;.&quot;um_usersgroups&quot;" EntityPropertyMapping1="Banking_Group:name" EntityPropertyMapping2="Banking_User:login" Connector="Banking" />

<ViewTargetResourceTemplate EntityType="Banking_Group" Profile="Administrator" />
<ViewTargetResourceTemplate EntityType="Banking_User" Profile="Administrator" />
```

The notion of groups in the Banking system is replaced by the notion of single roles in Usercube. A user belonging to the accountant group in the Banking system has the accountant single role in Usercube. To automate the correspondence, the connector's configuration requires a rule between the group resource and the single role. This can be done with a navigation rule for each single role and its corresponding group.

For simplicity's sake, only three roles are kept.

```xml
<SingleRole Identifier="BAGR09" DisplayName_L1="Banking role: all transactions" DisplayName_L2="Métiers : opérations toutes régions" Category="Banking" EntityType="Directory_User" Policy="Default" />
<SingleRole Identifier="BAG002" DisplayName_L1="Banking role: agency" DisplayName_L2="Métiers : agence" Category="Banking" EntityType="Directory_User" Policy="Default" />
<SingleRole Identifier="BAG003" DisplayName_L1="Banking role: employees agency" DisplayName_L2="Métiers : agence personnel" Category="Banking" EntityType="Directory_User" Policy="Default" />

<ResourceType Identifier="Banking_NominativeUser" DisplayName_L1="Banking User (nominative)" Policy="Default" TargetEntityType="Banking_User" Category="Banking" SourceEntityType="Directory_User" BlockProvisioning="false">
  <ScalarRule Property="login" Expression="C#:person:return person.Login;" />
  <NavigationRule Property="groups" Resource="Business role: all transactions" SingleRole="BAGR09" />
  <NavigationRule Property="groups" Resource="Business role: agency" SingleRole="BAG002" />
  <NavigationRule Property="groups" Resource="Business role: employees agency" SingleRole="BAG003" />
</ResourceType>
<ResourceTypeMapping Identifier="Banking_NominativeUser" Connection="BankingFulfillment" />
<ResourceCorrelationRule ResourceType="Banking_NominativeUser" TargetBinding="login" Policy="Default" SourceMatchedConfidenceLevel="100" SourceExpression="C#:person:return &quot;U&quot; + person.EmployeeId;" />
```

Define settings

As with every other Robot Framework script, the resource needs to be imported to launch the provisioning. The SeleniumLibrary also needs to be imported to use its keywords.

```robotframework
*** Settings ***
Resource    C:/identitymanagerDemo/Runtime/identitymanagerRobotFramework.resource
Library     SeleniumLibrary
```

Define variables

The variables in the Variables section can serve two purposes.

- Values that should be easy to modify: the browser and the Banking web application URL change with the provisioning environment.

- Values that are used multiple times: the Banking web application URL is used several times in the script. Defining it once avoids the editing mistakes that happen when only one of the occurrences is modified.

```robotframework
*** Variables ***
${BROWSER}       edge
${BANKINGURL}    http://localhost:5011
```

Define custom keywords

The script defines several custom keywords. As the element locators may not be easily understandable, it is important that the keywords are not long, and have descriptive names.

| Keyword | Details |
|---|---|
| Modify User | Sets a password for the user, then applies the provisioning order. This keyword does everything the Execute Modify keyword should do, so that it can be used for error handling. As the provisioned resource type may not have password reset settings, the password generation could fail, which is why Set Password is called through the Try Keyword keyword. |
| Restart Banking And Fail | Restarts the Banking application, then fails the keyword execution. This keyword should be used when the Banking application is in an unknown state. |
| Launch Banking App | Launches the Banking web application. To check that the web browser is on the right page, the page title is verified with the Title Should Be keyword. |
| Set Password | Generates a password for the provisioned user, sets their Banking password to that password, then sends a notification. This keyword sends the notification as soon as the password is set. First, this ensures that the notification is sent even if the rest of the script crashes. Second, this keeps the password in memory for as short a time as possible, which reduces security risks. |
| Add Group To User | Selects the group to add and clicks the Save button. This keyword also verifies that the web browser has the expected title. The Click Element At Coordinates keyword is used to reset the state of the page, as selecting the group hides the Save button. |
| Search User And Add Group | Goes to the page for adding groups to the right user and calls Add Group To User. This keyword also verifies that the web page has the expected title. |
| Add Groups | Calls Search User And Add Group for each group to add in the provisioning order. |
| Add All Groups | Computes the number of groups to add and, if there is at least one, calls Add Groups. The number of groups to add can only be found in the Changes > groups_add section of the provisioning order. This section does not exist when there are no groups to add, so Run Keyword And Ignore Error is used to avoid propagating the error. |
| Remove Group From User | Goes to the URL corresponding to the API call that removes the group from the user. |
| Remove Groups | Calls Remove Group From User for each group to remove in the provisioning order. |
| Remove All Groups | Computes the number of groups to remove and, if there is at least one, calls Remove Groups. The number of groups to remove can only be found in the Changes > groups_remove section of the provisioning order. This section does not exist when there are no groups to remove, so Run Keyword And Ignore Error is used to avoid propagating the error. |

*** Keywords ***
Modify User
    [Arguments]    ${order}
    # If Set Password fails, return to the user list and still process the groups
    Try Keyword    Set Password    ${order}
    Catch Keyword    Go To    ${BANKINGURL}/User
    Title Should Be    All Users - Banking System
    Add All Groups    ${order}
    Remove All Groups    ${order}

Restart Banking And Fail
    Close Browser
    Launch Banking App
    Fail    Provisioning failed, restarting the browser

Launch Banking App
    Open Browser    ${BANKINGURL}    ${BROWSER}
    Title Should Be    Home Page - Banking System

Set Password
    [Arguments]    ${order}
    ${login}=    Set Variable    ${order['Resource']['login']}
    Go To    ${BANKINGURL}/User/SetPassword/${login}
    Title Should Be    Edit ${login} - Banking System
    ${password}=    Generate Password
    Input Text    id:Password    ${password}
    Click Element    xpath:/html/body/div/main/div[1]/div/form/div[2]/input
    # Notify right away so the password stays in memory as briefly as possible
    Send Password Notification

Add Group To User
    [Arguments]    ${groupName}
    Select From List By Value    name:group    ${groupName}
    # Reset the page state: selecting a group hides the Save button
    Click Element At Coordinates    name:group    250    0
    Click Element    xpath:/html/body/div/main/div[1]/div/form/div[2]/input
    Title Should Be    All Users - Banking System

Search User And Add Group
    [Arguments]    ${login}    ${groupName}
    Go To    ${BANKINGURL}/User/AddGroup/${login}
    Title Should Be    Add Group to ${login} - Banking System
    Add Group To User    ${groupName}

Add Groups
    [Arguments]    ${order}    ${length}
    FOR    ${i}    IN RANGE    ${length}
        Search User And Add Group    ${order['Resource']['login']}    ${order['Changes']['groups_add'][${i}]['name']}
    END

Add All Groups
    [Arguments]    ${order}
    # groups_add is absent when there is nothing to add, so the lookup error is ignored
    ${status}    ${length}=    Run Keyword And Ignore Error    Get Length    ${order['Changes']['groups_add']}
    Run Keyword If    '${status}' == 'PASS'    Add Groups    ${order}    ${length}

Remove Group From User
    [Arguments]    ${login}    ${groupName}
    Go To    ${BANKINGURL}/User/RemoveGroup/${login}?groupId=${groupName}

Remove Groups
    [Arguments]    ${order}    ${length}
    FOR    ${i}    IN RANGE    ${length}
        Remove Group From User    ${order['Resource']['login']}    ${order['Changes']['groups_remove'][${i}]['name']}
    END

Remove All Groups
    [Arguments]    ${order}
    # groups_remove is absent when there is nothing to remove, so the lookup error is ignored
    ${status}    ${length}=    Run Keyword And Ignore Error    Get Length    ${order['Changes']['groups_remove']}
    Run Keyword If    '${status}' == 'PASS'    Remove Groups    ${order}    ${length}
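The Add All Groups and Remove All Groups logic can be mirrored in Python, for instance to inspect a provisioning order before running the script. The following is a minimal sketch, assuming the order is a nested dictionary with the Resource > login and Changes > groups_add / groups_remove keys that the keywords above read. The function name `group_changes` and the sample order are hypothetical. A missing section yields an empty list, which is the effect Run Keyword And Ignore Error achieves in the script.

```python
from typing import Any


def group_changes(order: dict[str, Any], section: str) -> list[tuple[str, str]]:
    """Return (login, group name) pairs for one Changes section of an order.

    `section` is "groups_add" or "groups_remove". A missing section yields an
    empty list, like the ignored Get Length error in the script.
    """
    login = order["Resource"]["login"]
    groups = order.get("Changes", {}).get(section, [])
    return [(login, group["name"]) for group in groups]


# Hypothetical order: one group to add, no groups_remove section at all.
order = {
    "Resource": {"login": "jdoe"},
    "Changes": {"groups_add": [{"name": "Accounting"}]},
}
print(group_changes(order, "groups_add"))     # [('jdoe', 'Accounting')]
print(group_changes(order, "groups_remove"))  # []
```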

Define mandatory keywords

To be able to provision the system, the script must contain the ExecuteAdd, ExecuteDelete, and ExecuteModify keywords. As the Banking system can only modify existing accounts, only the ExecuteModify keyword is implemented. The other two keywords only log a message.

To simplify error handling, the ExecuteModify keyword only calls the Modify User keyword. Because a single keyword is enough, it can be wrapped in Try Keyword, and any failure is then handled by the following Catch Keyword, which calls Restart Banking And Fail.

*** Keywords ***
ExecuteAdd
    [Arguments]    ${order}
    Log To Console    ExecuteAdd is not implemented

ExecuteDelete
    [Arguments]    ${order}
    Log To Console    ExecuteDelete is not implemented

ExecuteModify
    [Arguments]    ${order}
    Try Keyword    Modify User    ${order}
    Catch Keyword    Restart Banking And Fail
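The error handling of ExecuteModify and Modify User can be modeled in Python to make the control flow explicit. This is a sketch under an assumption inferred from Modify User, not the Usercube resource file's implementation: Try Keyword runs a keyword and records a failure instead of stopping the script, and the next Catch Keyword runs only if that attempt failed. The `TryCatch` class and its methods are hypothetical.

```python
from typing import Callable


class TryCatch:
    """Hypothetical model of the Try Keyword / Catch Keyword pair."""

    def __init__(self) -> None:
        self.last_failed = False

    def try_keyword(self, keyword: Callable[[], None]) -> None:
        # Run the keyword and remember a failure instead of propagating it.
        try:
            keyword()
            self.last_failed = False
        except Exception:
            self.last_failed = True

    def catch_keyword(self, keyword: Callable[[], None]) -> None:
        # Run the recovery keyword only if the last attempt failed.
        if self.last_failed:
            keyword()


log: list[str] = []


def set_password() -> None:
    raise RuntimeError("Set Password failed")


flow = TryCatch()
flow.try_keyword(set_password)
flow.catch_keyword(lambda: log.append("Go To /User"))
log.append("Add All Groups")  # Modify User carries on after the catch
print(log)  # ['Go To /User', 'Add All Groups']
```

Under this assumption, a failed Set Password does not stop Modify User, while any failure that escapes Modify User is handled by the Catch Keyword in ExecuteModify.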

Define test cases

Although Usercube uses the Robot Framework for provisioning, the Robot Framework is most often used for testing. This is why the Test Cases section defines what should happen when Usercube starts the Robot Framework task. Note that the Launch Provisioning keyword must be called for the provisioning to happen.

As the browser should always be closed after the tests, a teardown ensures that the browser is closed regardless of the script's execution state.

*** Test Cases ***
Run Provisioning
    Launch Banking App
    Launch Provisioning
    [Teardown]    Close Browser

Read the full script

The full script is as follows:

*** Settings ***
Resource    C:/identitymanagerDemo/Runtime/identitymanagerRobotFramework.resource
Library    SeleniumLibrary

*** Variables ***
${BROWSER}    edge
${BANKINGURL}    http://localhost:5011

*** Keywords ***
ExecuteAdd
    [Arguments]    ${order}
    Log To Console    ExecuteAdd is not implemented

ExecuteDelete
    [Arguments]    ${order}
    Log To Console    ExecuteDelete is not implemented

ExecuteModify
    [Arguments]    ${order}
    Try Keyword    Modify User    ${order}
    Catch Keyword    Restart Banking And Fail

Modify User
    [Arguments]    ${order}
    # If Set Password fails, return to the user list and still process the groups
    Try Keyword    Set Password    ${order}
    Catch Keyword    Go To    ${BANKINGURL}/User
    Title Should Be    All Users - Banking System
    Add All Groups    ${order}
    Remove All Groups    ${order}

Restart Banking And Fail
    Close Browser
    Launch Banking App
    Fail    Provisioning failed, restarting the browser

Launch Banking App
    Open Browser    ${BANKINGURL}    ${BROWSER}
    Title Should Be    Home Page - Banking System

Set Password
    [Arguments]    ${order}
    ${login}=    Set Variable    ${order['Resource']['login']}
    Go To    ${BANKINGURL}/User/SetPassword/${login}
    Title Should Be    Edit ${login} - Banking System
    ${password}=    Generate Password
    Input Text    id:Password    ${password}
    Click Element    xpath:/html/body/div/main/div[1]/div/form/div[2]/input
    # Notify right away so the password stays in memory as briefly as possible
    Send Password Notification

Add Group To User
    [Arguments]    ${groupName}
    Select From List By Value    name:group    ${groupName}
    # Reset the page state: selecting a group hides the Save button
    Click Element At Coordinates    name:group    250    0
    Click Element    xpath:/html/body/div/main/div[1]/div/form/div[2]/input
    Title Should Be    All Users - Banking System

Search User And Add Group
    [Arguments]    ${login}    ${groupName}
    Go To    ${BANKINGURL}/User/AddGroup/${login}
    Title Should Be    Add Group to ${login} - Banking System
    Add Group To User    ${groupName}

Add Groups
    [Arguments]    ${order}    ${length}
    FOR    ${i}    IN RANGE    ${length}
        Search User And Add Group    ${order['Resource']['login']}    ${order['Changes']['groups_add'][${i}]['name']}
    END

Add All Groups
    [Arguments]    ${order}
    # groups_add is absent when there is nothing to add, so the lookup error is ignored
    ${status}    ${length}=    Run Keyword And Ignore Error    Get Length    ${order['Changes']['groups_add']}
    Run Keyword If    '${status}' == 'PASS'    Add Groups    ${order}    ${length}

Remove Group From User
    [Arguments]    ${login}    ${groupName}
    Go To    ${BANKINGURL}/User/RemoveGroup/${login}?groupId=${groupName}

Remove Groups
    [Arguments]    ${order}    ${length}
    FOR    ${i}    IN RANGE    ${length}
        Remove Group From User    ${order['Resource']['login']}    ${order['Changes']['groups_remove'][${i}]['name']}
    END

Remove All Groups
    [Arguments]    ${order}
    # groups_remove is absent when there is nothing to remove, so the lookup error is ignored
    ${status}    ${length}=    Run Keyword And Ignore Error    Get Length    ${order['Changes']['groups_remove']}
    Run Keyword If    '${status}' == 'PASS'    Remove Groups    ${order}    ${length}

*** Test Cases ***
Run Provisioning
    Launch Banking App
    Launch Provisioning
    [Teardown]    Close Browser

Export CyberArk Data via SCIM

This guide shows how to set up a SCIM connector to extract data from your CyberArk instance into CSV source files that will in turn be fed to the Synchronization task and to your Usercube resource repository. It will focus on registering Usercube within the target CyberArk instance, configuring the connector, and building the job to perform regularly scheduled synchronization.

Prerequisites

External system configuration

Usually CyberArk provides the environment to use AAM (Application Access Manager) and SCIM (System for Cross-domain Identity Management). For example, PrivateArk Server, PrivateArk and other tools can be found on a VM-based environment.

It is strongly recommended that you follow the official CyberArk SCIM Server Implementation Guide (the CyberArk team can provide this document) in order to set up the environment. When you've completed the installation or if CyberArk has already installed it, you can verify the installation:

- Log into PrivateArk Client, then locate and open the SCIM Config safe.

- Check the presence of the following objects:

  - Encryption-key: The SCIM Server uses a local cache to store objects retrieved from the Vault. Although no credentials are retrieved (other than the ones in the SCIM Config safe, which are not stored in the cache), the cache is encrypted with this encryption key. The key is randomly generated and not exposed by the installer, but it can be changed if desired.
  - GlobalConfig.yml: This is the configuration file for the overall SCIM Server settings. It sets the performance parameters and any additional features.
  - Usercube-account: This is a privileged account that allows Usercube to authenticate its REST API requests to the SCIM Server. Its password must be the same as that of Usercube-user ("Usercube" can be replaced by any other name, such as "Client").
  - SCIM-account: This is a privileged account managed by the Central Policy Manager (CPM, the PAM module responsible for managing passwords and any configured policies/exceptions). It allows the SCIM Server to retrieve the password of SCIM-user through an Application Identity Manager (AIM) Credential Provider call.

- Verify that the following users were created in the PrivateArk Client:

  - Go to Tools > Administrative Tools.
  - Select Users and Groups.
  - Ensure the following users have been created:
    - SCIM-user: This is a CyberArk user with full privileges for creating and managing Safes, Accounts, Permissions, and Users. The CyberArk Command Line Interface on the SCIM Server (PACLI, used to perform quick Vault-level functions without logging in to the PrivateArk Client) requires this user to log into the Vault and manage objects on behalf of client applications such as Usercube.
    - Client-user: This is a CyberArk user for authenticating requests made to the SCIM Server through the REST API. (The name "Client-user" can be replaced, for example by "Usercube-user".)

Once these checks pass, the installation can be considered correct. In this example, the login is Usercube-user and the password is Cyberark1.

Usercube configuration

This step sets up the Usercube Agent to use the SCIM connector and access the CyberArk data.

The settings must be entered in the appsettings.agent > Connections section.

Connect to the target CyberArk instance

In the Connections section, add one new subsection that will contain the credentials for the target CyberArk. Use a meaningful name to remember which CyberArk is accessed via this section.

This example connects via the SCIMCyberArkExport connection to the CyberArk system:

appsettings.agent.json

{
  ...
  "Connections": {
    ...
    "SCIMCyberArkExport": {
      ...
    }
  }
}

Input credentials

In the newly created subsection, fill in:

- The Server attribute with the CyberArk's address. It has the form: https://host:port/CyberArk/scim.
- The Login attribute with the user's login value (in our example, Usercube-user).
- The Password attribute with the user's password value (in our example, Cyberark1).

For example:

appsettings.agent.json

{
  ...
  "Connections": {
    ...
    "SCIMCyberArkExport": {
      "Server": "https://host:port/CyberArk/scim",
      "Login": "Usercube-user",
      "Password": "Cyberark1"
    }
  }
}
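Before running the export, it can help to sanity-check the new connection subsection. This is a minimal Python sketch, assuming the appsettings.agent.json layout above (with the `...` placeholders filled in) and the https://host:port/CyberArk/scim address form. The function name `check_cyberark_connection` and the sample host are hypothetical, and the check is not part of Usercube.

```python
import json
from urllib.parse import urlparse


def check_cyberark_connection(settings: dict, name: str) -> list[str]:
    """Return a list of problems found in Connections > `name`."""
    connection = settings.get("Connections", {}).get(name)
    if connection is None:
        return [f"missing Connections > {name} subsection"]
    problems = [f"missing {key}" for key in ("Server", "Login", "Password")
                if not connection.get(key)]
    server = urlparse(connection.get("Server", ""))
    if server.scheme != "https":
        problems.append("Server should use https")
    if not server.path.rstrip("/").endswith("/CyberArk/scim"):
        problems.append("Server should end with /CyberArk/scim")
    return problems


settings = json.loads("""
{"Connections": {"SCIMCyberArkExport": {
    "Server": "https://cyberark.example.com:443/CyberArk/scim",
    "Login": "Usercube-user", "Password": "Cyberark1"}}}
""")
print(check_cyberark_connection(settings, "SCIMCyberArkExport"))  # []
```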

For pedagogical reasons, this guide focuses on the simplest way to set up the export, but it's not the most secure. Hence it is strongly recommended that you protect credentials using Azure Key Vault or CyberArk in a production environment. NETWRIX recommends completing this guide once, testing the configuration, and only then, switching to a more secure way of storing credentials.

Set exported objects, exported attributes and export files

This step focuses on choosing and setting up the list of SCIM objects and attributes to be exported.

The Filter attribute defines what is exported. It is located in the appsettings.agent > Connections > SCIMCyberArkExport subsection previously created.

Choose objects to export

The list of objects to export depends on the Role Model requirements. The list will evolve iteratively as the project's needs become clearer.

The SCIM entities available in a CyberArk implementation are:

- Users: CyberArk Users.

- Containers: Containers/CyberArk Safes.

- ContainerPermissions: Permissions on CyberArk Safes.

- Privileged Data: Privileged Data/CyberArk Accounts.

- Groups: CyberArk Groups.

Filters are defined in the next part.

Filtering

An exhaustive list of entities and attributes provided by CyberArk is available in their technical documentation or in the SCIM Swagger UI.

The Filter and FilterGroup setting syntax is detailed in SCIM optional attributes.

SCIMSyntax must also be set to CyberArk because the CyberArk system does not currently follow all the SCIM rules strictly.

Example

The following example sets up the Users, ContainerPermissions, Containers and Groups for export.

For Users, we give an example for each type of attribute:

- userName is an attribute of the base schema.
- ldapFullDN is an attribute of the urn:ietf:params:scim:schemas:cyberark:1.0:User schema because it is separated from the schema name by �.
- givenName is a sub-attribute of the name attribute because it is separated from it by :.

Notice the * that separates the entities.

appsettings.agent.json

{
  ...
  "Connections": {
    ...
    "SCIMCyberArkExport": {
      "Server": "https://host:port/CyberArk/scim",
      "Login": "Usercube-user",
      "Password": "Cyberark1",
      "Filter": "Users;urn:ietf:params:scim:schemas:cyberark:1.0:User�ldapFullDN|ldapDirectory id userName active name:givenName|middleName|familyName emails:value phoneNumbers:value title profileUrl source nativeIdentifier*ContainerPermissions;id user:value group:value container:value rights*Containers;id displayName type name",
      "FilterGroup": "Groups;id displayName",
      "SCIMSyntax": "CyberArk"
    }
  }
}
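To see how the Filter value breaks down, here is a minimal Python sketch that splits it into the CSV columns expected for each entity. The syntax rules are inferred from the example above and from the column names used later in the EntityTypeMapping (for instance name:givenName and ldapFullDN). They are assumptions, not the connector's own parser. The schema separator character is garbled in this copy of the page (it shows as �), so the sketch takes it as a parameter and uses a stand-in character. `parse_filter` is a hypothetical name.

```python
def parse_filter(filter_value: str, schema_sep: str) -> dict[str, list[str]]:
    """Map each entity in a SCIM Filter string to its expected CSV columns.

    Assumed rules: '*' separates entities, ';' separates an entity from its
    attributes, spaces separate attributes, 'parent:sub' selects a
    sub-attribute, '|' lists siblings sharing the same prefix, and
    'schema<schema_sep>attr' selects an attribute from another schema.
    """
    columns: dict[str, list[str]] = {}
    for chunk in filter_value.split("*"):
        entity, _, attributes = chunk.partition(";")
        entity_columns = columns.setdefault(entity.strip(), [])
        for token in attributes.split():
            if schema_sep in token:
                # Schema attributes appear to be mapped by their bare name.
                _, _, names = token.partition(schema_sep)
                entity_columns.extend(names.split("|"))
            elif ":" in token:
                parent, _, subs = token.partition(":")
                entity_columns.extend(f"{parent}:{sub}" for sub in subs.split("|"))
            else:
                entity_columns.append(token)
    return columns


# Stand-in for the garbled schema separator; replace it with the real character.
SEP = "\N{CURRENCY SIGN}"
filter_value = (
    f"Users;urn:ietf:params:scim:schemas:cyberark:1.0:User{SEP}ldapFullDN|ldapDirectory"
    " id userName name:givenName|familyName emails:value"
    "*Containers;id displayName type name"
)
print(parse_filter(filter_value, SEP))
```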

Set up export files

The export generates CSV source files that will be fed to the Synchronization task.

The SCIM connector generates one file per entity, named EntryFile + '_' + FilterEntity or MembersFile + '_' + FilterGroupEntity.

Moreover, SyncCookiesFile can be specified to indicate the location of the cookie file for an incremental export.

For more details, see SCIM optional attributes.

The target directory and file names can be chosen freely. However, NETWRIX strongly recommends using the working directory's Temp/ExportOutput folder and choosing file names that start with the CyberArk_ prefix.

Example

With the following example, the resulting files are:

- C:/identitymanagerDemo/Temp/ExportOutput/CyberArk_Users.csv
- C:/identitymanagerDemo/Temp/ExportOutput/CyberArk_ContainerPermissions.csv
- C:/identitymanagerDemo/Temp/ExportOutput/CyberArk_Containers.csv
- C:/identitymanagerDemo/Temp/ExportOutput/CyberArk_members_Groups.csv

appsettings.agent.json

{
  ...
  "Connections": {
    ...
    "SCIMCyberArkExport": {
      "Server": "https://host:port/CyberArk/scim",
      "Login": "Usercube-user",
      "Password": "Cyberark1",
      "Filter": "Users;urn:ietf:params:scim:schemas:cyberark:1.0:User�ldapFullDN|ldapDirectory id userName active name:givenName|middleName|familyName emails:value phoneNumbers:value title profileUrl source nativeIdentifier*ContainerPermissions;id user:value group:value container:value rights*Containers;id displayName type name",
      "FilterGroup": "Groups;id displayName",
      "EntryFile": "C:/identitymanagerDemo/Temp/ExportOutput/CyberArk",
      "MembersFile": "C:/identitymanagerDemo/Temp/ExportOutput/CyberArk_members",
      "SCIMSyntax": "CyberArk"
    }
  }
}

Every file contains the data as CSV, with one column per attribute.
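The naming rule above can be written out to predict which files an export will produce. This is a minimal Python sketch, assuming the .csv extension seen in the example file list and taking the entity names from the Filter and FilterGroup values. `export_file_names` is a hypothetical helper, and the Filter value is shortened to keep only the entity names that matter here.

```python
def entities(filter_value: str) -> list[str]:
    # The entity name is the text before ';' in each '*'-separated chunk.
    return [chunk.partition(";")[0].strip() for chunk in filter_value.split("*")]


def export_file_names(entry_file: str, members_file: str,
                      filter_value: str, filter_group: str) -> list[str]:
    """EntryFile + '_' + entity for Filter, MembersFile + '_' + entity for FilterGroup."""
    names = [f"{entry_file}_{entity}.csv" for entity in entities(filter_value)]
    names += [f"{members_file}_{entity}.csv" for entity in entities(filter_group)]
    return names


files = export_file_names(
    "C:/identitymanagerDemo/Temp/ExportOutput/CyberArk",
    "C:/identitymanagerDemo/Temp/ExportOutput/CyberArk_members",
    "Users;id userName*ContainerPermissions;id rights*Containers;id name",
    "Groups;id displayName",
)
for name in files:
    print(name)
```

With the settings of the example, this reproduces the four file paths listed above.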

Build the Connector

Declare a connector

To be used for export tasks, a connector must be declared in the applicative configuration and linked to an Agent.

It is strongly recommended that the applicative configuration be stored in the working directory's Conf folder as a set of XML files organized by connector.

- In the Conf folder, create a SCIMCyberArk directory.

- In the SCIMCyberArk directory, create a CyberArk Connector.xml file. This file contains the declaration of the connector and the associated Entity Model.

- Use the <Connector> element to declare the connector with the following attributes:

  - Identifier identifies this connector in the applicative configuration. We recommend using a meaningful name such as CyberArk. If several connections to several CyberArk targets are possible, only one CyberArk Connector per Agent is used.
  - DisplayName_Li, i ∈ [1..16] are used in the UI.
  - Agent is the identifier of the Agent that will run this connector's export task. The Agent's identifier can be found in the agent's appsettings.agent > OpenId > AgentIdentifier.

- Don't forget the <?xml> and <ConfigurationFile> elements (see example below).

This example declares the CyberArk connector on the Local agent:

Conf/SCIMCyberArk/CyberArk Connector.xml

<?xml version="1.0" encoding="utf-8"?>
<ConfigurationFile xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns="urn:schemas-usercube-com:configuration">
  ...
  <Connector Identifier="CyberArk" DisplayName_L1="CyberArk" Agent="Local" />
  <Connection Identifier="SCIMCyberArkExport" Connector="CyberArk" DisplayName_L1="CyberArk" Package="Usercube.SCIM.CyberArk@0000001" />
  ...
</ConfigurationFile>
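As the configuration grows, a quick script can confirm that every <Connection> points at a declared <Connector>. This is a minimal Python sketch using the standard xml.etree.ElementTree module. It assumes the urn:schemas-usercube-com:configuration namespace shown above, and `undeclared_connectors` is a hypothetical helper, not a Usercube tool.

```python
import xml.etree.ElementTree as ET

NS = {"c": "urn:schemas-usercube-com:configuration"}


def undeclared_connectors(xml_text: str) -> list[str]:
    """Return Connection identifiers whose Connector attribute is not declared."""
    root = ET.fromstring(xml_text)
    declared = {c.get("Identifier") for c in root.findall("c:Connector", NS)}
    return [conn.get("Identifier")
            for conn in root.findall("c:Connection", NS)
            if conn.get("Connector") not in declared]


xml_text = """<ConfigurationFile xmlns="urn:schemas-usercube-com:configuration">
  <Connector Identifier="CyberArk" DisplayName_L1="CyberArk" Agent="Local" />
  <Connection Identifier="SCIMCyberArkExport" Connector="CyberArk"
              DisplayName_L1="CyberArk" Package="Usercube.SCIM.CyberArk@0000001" />
</ConfigurationFile>"""
print(undeclared_connectors(xml_text))  # []
```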

Build the entity model

The exported data to be written to the resource repository must be aligned with the Entity Model.

The Entity Model should match as closely as possible the structure of the CyberArk data relevant for Usercube. It is designed by analyzing the CyberArk data structure and describing that data with Entity Types and Entity Associations. Ultimately, it is up to the integration team to design the Entity Model that best serves the Role Model needs. It will most likely be refined iteratively throughout the project integration.

A good starting point for the Entity Model is to mirror the shape of the exported CyberArk SCIM objects. This guide provides a few examples that can serve this purpose. Thus, CyberArk SCIM objects such as Users and Groups can be described by Entity Types, and group membership by Entity Associations.

The Entity Model for the CyberArk connector is written in the applicative configuration.

It is strongly recommended to write the entity model to the newly created Conf/SCIMCyberArk/CyberArk Connector.xml file.

Write entity types

Declaring an Entity Type is achieved with the <EntityType> tag and the following attributes:

- Identifier is the entity type's name. It must be unique among the entity types. It is strongly recommended to prefix this name with the connector's name. An example for CyberArk is CyberArk_User.
- DisplayName_Li, i ∈ [1..16] are used in the UI to identify this Entity Type for the end-user. DisplayName_L1 is the name of the entity type in language number one. If this language is English, a good example value would be CyberArk - User.

Example

Conf/SCIMCyberArk/CyberArk Connector.xml

...

<EntityType Identifier="CyberArk_User" DisplayName_L1="CyberArk - User">
  ...
</EntityType>
...

The CyberArk SCIM object attributes are modeled by Entity Properties, with the <Property> tags declared as children of the <EntityType>.

Remember that there are two kinds of properties (determined by the TargetColumnIndex): scalar and navigation.

- Scalar properties represent scalar attributes such as userName, active or givenName.
- Navigation properties represent associations such as group memberships.

Finally, the main attributes of the <Property> tag are the following:

- Identifier identifies the property with a mandatory name. It must be unique among the entity properties of this entity type.
- DisplayName_Li, i ∈ [1..16] are used in the UI.
- Type defines the type of property. A scalar property type can be: String, Bytes, Int16, Int32, Int64, DateTime, Bool, Guid, Double, Binary, Byte, or Option. The navigation property type is ForeignKey.
- TargetColumnIndex defines in which column of the resource table the property is stored. See more details at TargetColumnIndex.

Example

This example defines an entity type named CyberArk_User to match the attributes selected for extraction from CyberArk in the previous example.

Notice that the groups and containers properties have no TargetColumnIndex attribute and use Type="ForeignKey". Omitting TargetColumnIndex indicates that these properties are navigation properties.

Conf/SCIMCyberArk/CyberArk Connector.xml

...

<EntityType Identifier="CyberArk_User" DisplayName_L1="CyberArk User" DisplayName_L2="Compte CyberArk">
  <Property Identifier="CyberArk_id" DisplayName_L1="Id" IsKey="true" TargetColumnIndex="0" Type="String" />
  <Property Identifier="userName" DisplayName_L1="User Name" TargetColumnIndex="6" Type="String" />
  <Property Identifier="active" DisplayName_L1="Active" TargetColumnIndex="7" Type="String" />
  <Property Identifier="givenName" DisplayName_L1="First Name" TargetColumnIndex="8" Type="String" />
  <Property Identifier="middleName" DisplayName_L1="Middle Name" TargetColumnIndex="9" Type="String" />
  <Property Identifier="familyName" DisplayName_L1="Last Name" TargetColumnIndex="10" Type="String" />
  <Property Identifier="emails" DisplayName_L1="Emails" TargetColumnIndex="11" Type="String" />
  <Property Identifier="phoneNumbers" DisplayName_L1="Phone Numbers" TargetColumnIndex="12" Type="String" />
  <Property Identifier="title" DisplayName_L1="Title" TargetColumnIndex="13" Type="String" />
  <Property Identifier="profileUrl" DisplayName_L1="Profile Url" TargetColumnIndex="14" Type="String" />
  <Property Identifier="dn" DisplayName_L1="DN" TargetColumnIndex="15" Type="String" />
  <Property Identifier="source" DisplayName_L1="Source" TargetColumnIndex="16" Type="String" />
  <Property Identifier="ldapFullDN" DisplayName_L1="LdapFullDN" TargetColumnIndex="17" Type="String" />
  <Property Identifier="ldapDirectory" DisplayName_L1="LdapDirectory" TargetColumnIndex="18" Type="String" />
  <Property Identifier="groups" DisplayName_L1="Groups" Type="ForeignKey" />
  <Property Identifier="containers" DisplayName_L1="containers" Type="ForeignKey" />
</EntityType>
...
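The scalar/navigation distinction can be checked mechanically on an <EntityType>. This is a minimal Python sketch with xml.etree.ElementTree, assuming the rule stated above (a property without TargetColumnIndex is a navigation property). It adds a hypothetical extra check that no two scalar properties share a column, since they would otherwise be stored in the same place. The sample element omits the configuration namespace for brevity, and `classify_properties` is a hypothetical name.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def classify_properties(entity_type_xml: str) -> dict[str, list[str]]:
    """Split an EntityType's properties into scalar and navigation ones."""
    entity_type = ET.fromstring(entity_type_xml)
    result: dict[str, list[str]] = {"scalar": [], "navigation": []}
    columns = Counter()
    for prop in entity_type.findall("Property"):
        index = prop.get("TargetColumnIndex")
        if index is None:
            result["navigation"].append(prop.get("Identifier"))
        else:
            result["scalar"].append(prop.get("Identifier"))
            columns[index] += 1
    clashes = [index for index, count in columns.items() if count > 1]
    if clashes:
        raise ValueError(f"TargetColumnIndex used more than once: {clashes}")
    return result


sample = """<EntityType Identifier="CyberArk_User">
  <Property Identifier="CyberArk_id" IsKey="true" TargetColumnIndex="0" Type="String" />
  <Property Identifier="userName" TargetColumnIndex="6" Type="String" />
  <Property Identifier="groups" Type="ForeignKey" />
  <Property Identifier="containers" Type="ForeignKey" />
</EntityType>"""
print(classify_properties(sample))
```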

Write entity associations

Entity Types are associated through their navigation properties with Entity Association elements.

Example

The following example declares an n-n association between CyberArk_User and CyberArk_Group.

The groups property of a CyberArk_User is a collection of IDs of the groups (modeled as the CyberArk_Group EntityType) of which this CyberArk_User is a member.

The Users property of a CyberArk_Group is a collection of IDs of the CyberArk_User resources that are members of this group.

Conf/SCIMCyberArk/CyberArk Connector.xml

...

<EntityAssociation Identifier="CyberArk_Group_Members" DisplayName_L1="Group Members" IsProperty1Collection="true" Property1="CyberArk_Group:Users" IsProperty2Collection="true" Property2="CyberArk_User:groups" />
...

The exact nature of the IDs is described by the associated EntityAssociationMapping.

Notice the format of the Property1 and Property2 XML attributes: the name of the entity type followed by : and the name of an entity property. It is a binding that describes both the target entity type and the property in one expression.

Create mapping

The entity type must be mapped property by property to the exported attributes of CyberArk SCIM objects (namely, the columns of the CSV source files generated by the export).

The EntityTypeMapping, EntityAssociationMapping, and EntityPropertyMapping elements serve this purpose.

Write the entity type mapping

The EntityTypeMapping element maps scalar properties from the CSV source file to an entity type.

The CSV source file path is written to the ConnectionTable xml attribute. The target entity type name is written to the Identifier xml attribute.

Conf/SCIMCyberArk/CyberArk Connector.xml

...

<EntityTypeMapping Identifier="CyberArk_User" Connector="CyberArk" ConnectionTable="SCIMCyberArkExport_Users">
  ...
</EntityTypeMapping>
...

To do so, the entity type mapping uses the EntityPropertyMapping element with the <Property> tag. This maps the CSV column given in ConnectionColumn to the target EntityType property given in the Identifier attribute.

Example

Conf/SCIMCyberArk/CyberArk Connector.xml

...

<EntityTypeMapping Identifier="CyberArk_User" Connector="CyberArk" ConnectionTable="SCIMCyberArkExport_Users">
  <Property Identifier="CyberArk_id" ConnectionColumn="id" IsPrimaryKey="true" />
  <Property Identifier="userName" ConnectionColumn="userName" />
  <Property Identifier="active" ConnectionColumn="active" />
  <Property Identifier="givenName" ConnectionColumn="name:givenName" />
  <Property Identifier="middleName" ConnectionColumn="name:middleName" />
  <Property Identifier="familyName" ConnectionColumn="name:familyName" />
  <Property Identifier="emails" ConnectionColumn="emails:value" IsMultiValuedProperty="true" />
  <Property Identifier="phoneNumbers" ConnectionColumn="phoneNumbers:value" IsMultiValuedProperty="true" />
  <Property Identifier="title" ConnectionColumn="title" />
  <Property Identifier="profileUrl" ConnectionColumn="profileUrl" />
  <Property Identifier="ldapDirectory" ConnectionColumn="ldapDirectory" />
  <Property Identifier="ldapFullDN" ConnectionColumn="ldapFullDN" />
  <Property Identifier="source" ConnectionColumn="source" ScimSchema="urn:ietf:params:scim:schemas:pam:1.0:LinkedObject" />
  <Property Identifier="dn" ConnectionColumn="nativeIdentifier" ScimSchema="urn:ietf:params:scim:schemas:pam:1.0:LinkedObject" />
</EntityTypeMapping>
...

As a result, after synchronization, the UR_Resource table is updated with the data from the CSV source files.

Take the example of a new CyberArk_User that has never been synchronized. The UR_Resource table receives a new line whose column with TargetColumnIndex 6 (userName) is filled in with the userName column from the C:/identitymanagerDemo/Temp/ExportOutput/CyberArk_Users.csv file.
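The example above can be pictured as copying each mapped CSV column into the resource column chosen by TargetColumnIndex. This minimal Python sketch only illustrates that data flow. It does not show how Synchronization actually writes UR_Resource. The two dictionaries reproduce a few properties from the EntityType and EntityTypeMapping examples, and the CSV line (including the cadam login) is made up for illustration.

```python
import csv
import io

# Property -> CSV column (from the EntityTypeMapping example).
CONNECTION_COLUMN = {"CyberArk_id": "id", "userName": "userName",
                     "givenName": "name:givenName", "familyName": "name:familyName"}
# Property -> TargetColumnIndex (from the EntityType example).
TARGET_COLUMN_INDEX = {"CyberArk_id": 0, "userName": 6, "givenName": 8, "familyName": 10}


def to_resource_columns(row: dict[str, str]) -> dict[int, str]:
    """Return {resource column index: value} for one exported CSV row."""
    return {TARGET_COLUMN_INDEX[prop]: row[column]
            for prop, column in CONNECTION_COLUMN.items()}


# Made-up export line, for illustration only.
csv_text = "id,userName,name:givenName,name:familyName\n100,cadam,Christian,Adam\n"
row = next(csv.DictReader(io.StringIO(csv_text)))
print(to_resource_columns(row))  # {0: '100', 6: 'cadam', 8: 'Christian', 10: 'Adam'}
```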

Write the entity association mapping

The EntityAssociationMapping element maps navigation properties, used in EntityAssociation.

An EntityAssociationMapping element refers to an EntityAssociation given in the Identifier XML attribute. Then, like the EntityTypeMapping element, it maps column values from a CSV source file to an EntityType property.

Example

The following example describes the actual user/group associations between CyberArk_User and CyberArk_Group. These associations are exported from the CyberArk system into the C:/identitymanagerDemo/Temp/ExportOutput/CyberArk_members_Groups.csv file. Each line of the file associates a value (property CyberArk_id from CyberArk_Group) and a MemberId (property CyberArk_id from CyberArk_User).

| value | MemberId |
|---|---|
| 1 | 100 |
| 1 | 101 |
| 2 | 102 |
| 2 | 103 |
| 3 | 104 |

The following EntityAssociationMapping describes the mapping for the CyberArk_Group_Members EntityAssociation:

Conf/SCIMCyberArk/CyberArk Connector.xml

...

<EntityAssociation Identifier="CyberArk_Group_Members" DisplayName_L1="Group Members" IsProperty1Collection="true" Property1="CyberArk_Group:Users" IsProperty2Collection="true" Property2="CyberArk_User:groups" />
<EntityAssociationMapping Identifier="CyberArk_Group_Members" Column1="value" EntityPropertyMapping1="CyberArk_Group:CyberArk_id" Column2="MemberId" EntityPropertyMapping2="CyberArk_User:CyberArk_id" Connector="CyberArk" ConnectionTable="SCIMCyberArkExport_members_Groups" />
...

Here are a few explanations:

Users/CyberArk_Group

The Users property in the CyberArk_Group entity:

- is written to the Property1 attribute of the CyberArk_Group_Members EntityAssociation element.
- is filled in by values from the MemberId column (written to the Column2 attribute of the CyberArk_Group_Members EntityAssociationMapping element) in the C:/identitymanagerDemo/Temp/ExportOutput/CyberArk_members_Groups.csv file.

These values identify resources of type CyberArk_User by their CyberArk_id property (written to the EntityPropertyMapping2 attribute of the EntityAssociationMapping element).

Groups/CyberArk_User

The groups property in the CyberArk_User entity:

- is written to the Property2 attribute of the CyberArk_Group_Members EntityAssociation element.
- is filled in by values from the value column (written to the Column1 attribute of the CyberArk_Group_Members EntityAssociationMapping element) in the C:/identitymanagerDemo/Temp/ExportOutput/CyberArk_members_Groups.csv file.

These values identify resources of type CyberArk_Group by their CyberArk_id property (written to the EntityPropertyMapping1 attribute of the EntityAssociationMapping element).
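The two directions of the CyberArk_Group_Members association can be rebuilt from the membership table shown earlier. This is a minimal Python sketch, assuming the CSV has the value and MemberId headers from that table. It only illustrates how Column1 and Column2 feed the Users and groups properties; it is not Usercube's synchronization code.

```python
import csv
import io
from collections import defaultdict

members_csv = """value,MemberId
1,100
1,101
2,102
2,103
3,104
"""

group_users: dict[str, list[str]] = defaultdict(list)  # CyberArk_Group:Users
user_groups: dict[str, list[str]] = defaultdict(list)  # CyberArk_User:groups

for row in csv.DictReader(io.StringIO(members_csv)):
    group_id, user_id = row["value"], row["MemberId"]  # Column1, Column2
    group_users[group_id].append(user_id)
    user_groups[user_id].append(group_id)

print(dict(group_users))  # {'1': ['100', '101'], '2': ['102', '103'], '3': ['104']}
print(dict(user_groups))  # {'100': ['1'], '101': ['1'], '102': ['2'], '103': ['2'], '104': ['3']}
```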

Display

This step focuses on configuring a nice display for the synchronized list of resources in the UI.

Navigation

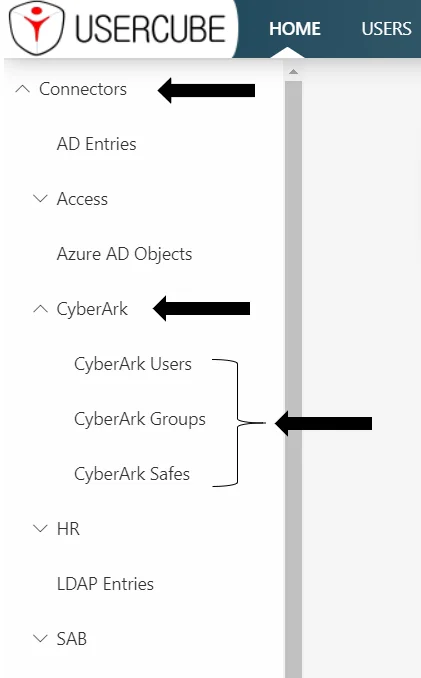

A MenuItem can be added to include a link to the resources list in the left menu in the UI home screen.

Parent menu item

It is strongly recommended to gather synchronized resources menu items under parent menu items. These are usually declared in the Nav.xml file of the configuration root folder.

Example

Conf/Nav.xml

...

<MenuItem Identifier="Nav_Connectors" DisplayName_L1="Connectors" DisplayName_L2="Connecteurs" ParentMenuItem="Nav" />
...

Child menu item

It is strongly recommended to use a new CyberArk Nav.xml file in the SCIMCyberArk connector's folder to add the CyberArk SCIM objects menu item.

Example

Conf/SCIMCyberArk/CyberArk Nav.xml

...

<MenuItem Identifier="Nav_Connectors_CyberArk" DisplayName_L1="CyberArk" DisplayName_L2="CyberArk" ParentMenuItem="Nav_Connectors">
  <MenuItem Identifier="Nav_Connectors_CyberArk_User" DisplayName_L1="CyberArk Users" DisplayName_L2="Comptes CyberArk" EntityType="CyberArk_User" />
  <MenuItem Identifier="Nav_Connectors_CyberArk_Group" DisplayName_L1="CyberArk Groups" DisplayName_L2="Groupes CyberArk" EntityType="CyberArk_Group" />
  <MenuItem Identifier="Nav_Connectors_CyberArk_Container" DisplayName_L1="CyberArk Safes" DisplayName_L2="Safes CyberArk" EntityType="CyberArk_Container" />
</MenuItem>
...

This adds a new menu item under the Nav_Connectors menu item declared in the root Nav.xml file. This new menu item gives access to the list of synchronized CyberArk SCIM objects.

Configuration

It is strongly recommended that the display configuration be written to a new CyberArk UI.xml file in the SCIMCyberArk connector's folder.

Display entity type

The DisplayEntityType describes how a single resource should be displayed.

Example

Conf/SCIMCyberArk/CyberArk UI.xml

...

<DisplayEntityType Identifier="CyberArk_User">
  <Property OutputType="BasicCollection" Identifier="groups" />
  <Property OutputType="BasicCollection" Identifier="containers" />
</DisplayEntityType>
...

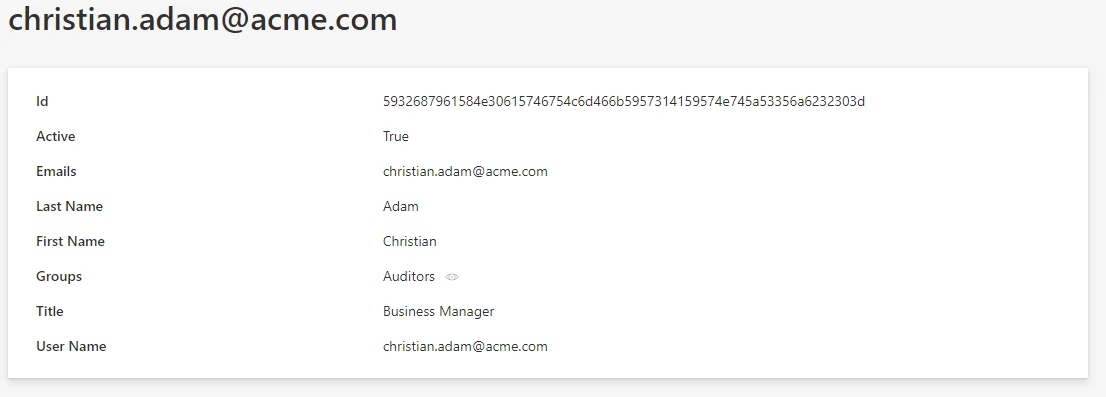

This configuration produces the display shown above for christian.adam@acme.com.

The scalar properties don't need to be configured: they are automatically displayed. The only information that the DisplayEntityType adds here is that the groups and containers navigation properties are displayed with the BasicCollection output type. An eye icon is displayed to take you directly to the matching page.

Display table

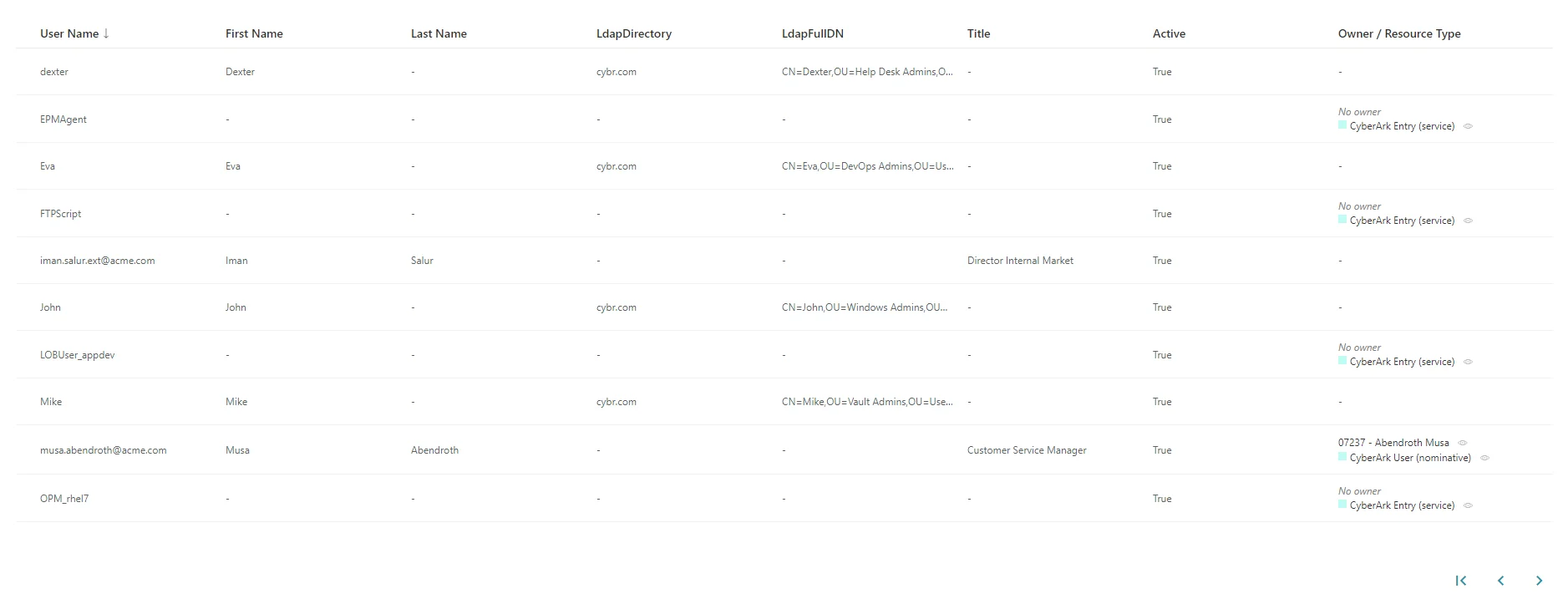

The DisplayTable elements describe how a list of resources should be displayed.

The DisplayTable contains a list of DisplayTableColumn elements that identify which properties should be included in the list display.

Example

Conf/SCIMCyberArk/CyberArk UI.xml

...

<DisplayTable Identifier="CyberArk_User" EntityType="CyberArk_User" DisplayTableDesignElement="resourcetable" IsEntityTypeDefault="true">
  <Column DefaultSortPriority="1" DisplayBinding="userName" IsDisplayInSummaryView="true" IsResizable="true" IsSortable="true" CanBeFiltered="true" ColumnSize="2" />
  <Column DisplayBinding="givenName" IsDisplayInSummaryView="true" IsResizable="true" IsSortable="true" CanBeFiltered="true" ColumnSize="2" />
  <Column DisplayBinding="familyName" IsDisplayInSummaryView="true" IsResizable="true" IsSortable="true" CanBeFiltered="true" ColumnSize="2" />
  <Column DisplayBinding="ldapDirectory" IsDisplayInSummaryView="true" IsResizable="true" IsSortable="true" CanBeFiltered="true" ColumnSize="2" />
  <Column DisplayBinding="ldapFullDN" IsDisplayInSummaryView="true" IsResizable="true" IsSortable="true" CanBeFiltered="true" ColumnSize="2" />
  <Column DisplayBinding="title" IsDisplayInSummaryView="true" IsResizable="true" IsSortable="true" CanBeFiltered="true" ColumnSize="2" />
  <Column DisplayBinding="active" IsDisplayInSummaryView="true" IsResizable="true" IsSortable="true" CanBeFiltered="true" ColumnSize="2" />
</DisplayTable>
...

This configuration produces the list display shown above.

Internal display name

An InternalDisplayName can also be declared as an

EntityPropertyExpression.

The InternalDisplayName is used in several UI screens to identify a resource for the user.

With no custom InternalDisplayName, a default value is used: the first property of the entity type whose identifier contains the string "name". If no such property is found, the first declared property of the entity type is used.
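The default selection of the InternalDisplayName can be sketched as follows. This is an illustration of the rule, not Usercube's code: it assumes the properties are passed in declaration order and that the match on "name" is case-insensitive (with a case-sensitive match, identifiers such as userName would not qualify). The property lists are taken from the CyberArk_User DisplayTable example.

```python
from typing import Sequence


def default_internal_display_name(property_identifiers: Sequence[str]) -> str:
    """Pick the property used as InternalDisplayName when none is declared.

    Sketch only: assumes declaration order and a case-insensitive match.
    """
    if not property_identifiers:
        raise ValueError("an entity type needs at least one property")
    for identifier in property_identifiers:
        # First declared property whose identifier contains "name".
        if "name" in identifier.lower():
            return identifier
    # Fallback: the first declared property of the entity type.
    return property_identifiers[0]


if __name__ == "__main__":
    cyberark_user = ["userName", "givenName", "familyName", "title", "active"]
    print(default_internal_display_name(cyberark_user))  # userName
    print(default_internal_display_name(["title", "active"]))  # title
```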

Example

Conf/SCIMCyberArk/CyberArk UI.xml

...

<EntityPropertyExpression Identifier="CyberArk_User_InternalDisplayName" Expression="C#:resource:return resource.userPrincipalName ?? resource.mail ?? resource.displayName ?? resource.Id.ToString();" EntityType="CyberArk_User" Property="InternalDisplayName" />
...

adds the InternalDisplayName to the CyberArk_User entity type to be used by the UI.

Permissions

This step focuses on setting up permissions for Usercube's end-users, granting them access to the connector.

The AccessControlRule and AccessControlEntry elements define permissions for end-user profiles to read and write the connector's data (such as resources of a given entity type). They are used by the UI when displaying data such as resources and available roles.

It is strongly recommended that permissions be written to a new file. For example, the administrator profile permissions can be written to the CyberArk Profile Administrator.xml file.

Example

The following example sets permissions for the Administrator profile.

It entitles an administrator to display CyberArk SCIM resource and role categories from the UI.

Conf/SCIMCyberArk/CyberArk Profile Administrator.xml

...

<AccessControlRule Profile="Administrator" EntityType="ResourceType" Identifier="Administrator_ResourceTypeSelector_resourceType_CyberArk" DisplayName_L1="Administrator_ResourceTypeSelector_resourceType_CyberArk">
  <Entry Permission="/Custom/Resources/CyberArk_User/View" CanExecute="true" />
  <Entry Permission="/Custom/Resources/CyberArk_Group/View" CanExecute="true" />
  <Entry Permission="/Custom/Resources/CyberArk_Container/View" CanExecute="true" />
</AccessControlRule>
<AccessControlRule Profile="Administrator" EntityType="Category" Identifier="Administrator_ResourceTypeSelector_category_CyberArk" DisplayName_L1="Administrator_ResourceTypeSelector_category_CyberArk">
  <Entry Permission="/Custom/Resources/CyberArk_User/View" CanExecute="true" />
  <Entry Permission="/Custom/Resources/CyberArk_Group/View" CanExecute="true" />
  <Entry Permission="/Custom/Resources/CyberArk_Container/View" CanExecute="true" />
</AccessControlRule>
...

Jobs

Construction

This step focuses on writing a Complete Synchronization job.

It is strongly recommended to write Jobs associated with the CyberArk connector to the Conf/SCIMCyberArk/SCIM CyberArk Jobs.xml file.

Components

All the job steps can be found in the CreateConnectorSynchroComplete scaffolding.

Example

Conf/SCIMCyberArk/SCIM CyberArk Jobs.xml

...

<CreateConnectorSynchroComplete Connector="CyberArk" DisplayName_L1="01: CyberArk - Synchronization Complete (scaffolding)" JobIdentifier="CyberArk_Synchronize_Complete_Scaffolding">
  <OpenIdIdentifier Identifier="Job"/>
</CreateConnectorSynchroComplete>
...

This job will be executed on CyberArk's connector agent.

Notice the Identifier attribute with the value Job in the OpenIdIdentifier tag. It refers to the ClientId written to the appsettings.agent technical configuration. The Tasks will authenticate with the profile associated with this ClientId in the <OpenIdClient> xml configuration element.

Incremental synchronization can be configured with the following scaffolding.

Permissions

The execution of a Job entails execution of Tasks, reading/writing to the Database and sending files over to the Server. These operations are protected by an authorization mechanism.

To complete a Job, the Agent, via the Usercube-Invoke-Job tool, uses:

- a Profile associated with the Job itself, to read/write:
  - the UJ_Jobs and UJ_Tasks tables (list of tasks);
  - the UJ_JobInstances table (progress report);

- a Profile for each Task, to read/write the UJ_TaskInstances table (progress report) and perform other operations such as sending export files over to the Server.

Each Profile must be assigned the right permissions for the associated Job or Task to perform.

Every request from the Agent to the Server within the execution of a Job must be authenticated with an OpenID Connect ClientId/Secret pair, linked to a Profile.

Create a profile

Here, we focus on creating one profile, used by the Job and every Task of the Job.

Conf/Profile AgentJob.xml

...

<Profile Identifier="AgentSynchro" DisplayName_L1="Agent Synchro" />
...

Following the Principle of Least Privilege, Netwrix strongly recommends creating a dedicated Profile for the Synchronization jobs, distinct from the one used by the Provisioning job, so that access rights stay separated. Applied even more rigorously, the same principle would call for one profile per Task; this is not necessary, since most Synchronization tasks require the same permissions.

Grant synchronization access rights to the profile

For an Agent to launch server-side Tasks from the Job via the Usercube-Invoke-Job tool, the profile linked to these tasks and used by the tool must be authorized to execute them.

Server-side Tasks for a simple Synchronization job usually are:

- Prepare-Synchronization

- Synchronization

Required permissions are:

- View Tasks:
  - /Jobs/Task/Query
- Progress Report:
  - /Jobs/JobInstance/Query
  - /Jobs/JobInstance/Update
  - /Jobs/TaskInstance/Query
  - /Jobs/TaskInstance/update
- Synchronization and Prepare-Synchronization:
  - /Connectors/Connector/Query
  - /Connectors/SynchronizeSession
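To review a profile against the required permissions listed above, a small check like the following can help. It is a hypothetical helper, not a Usercube tool: the `granted` set stands for whatever permissions you have collected for the profile yourself, and paths are compared case-insensitively, which is an assumption made because the list mixes "Update" and "update".

```python
from typing import Dict, Iterable, List, Set

# Required permissions for a simple Synchronization job, grouped by purpose.
REQUIRED_PERMISSIONS: Dict[str, Set[str]] = {
    "View Tasks": {"/Jobs/Task/Query"},
    "Progress Report": {
        "/Jobs/JobInstance/Query",
        "/Jobs/JobInstance/Update",
        "/Jobs/TaskInstance/Query",
        "/Jobs/TaskInstance/update",
    },
    "Synchronization and Prepare-Synchronization": {
        "/Connectors/Connector/Query",
        "/Connectors/SynchronizeSession",
    },
}


def missing_permissions(granted: Iterable[str]) -> Dict[str, List[str]]:
    """Return, per purpose, the required permissions absent from `granted`."""
    # Assumption: permission paths are compared case-insensitively.
    granted_lower = {permission.lower() for permission in granted}
    missing: Dict[str, List[str]] = {}
    for purpose, required in REQUIRED_PERMISSIONS.items():
        gap = sorted(p for p in required if p.lower() not in granted_lower)
        if gap:
            missing[purpose] = gap
    return missing


if __name__ == "__main__":
    granted = {"/Jobs/Task/Query", "/Connectors/Connector/Query"}
    for purpose, permissions in missing_permissions(granted).items():
        print(purpose, permissions)
```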

Granting access can be done via the Synchronization AccessControlRules scaffolding and the JobViewAccessControlRules scaffolding.

The following examples (or similar) should be written to Conf/Profile AgentSynchro.xml.

This example entitles the AgentSynchro profile to run any synchronization job:

Conf/Profile AgentSynchro.xml

...

<SynchronizationAccessControlRules Profile="AgentSynchro"/>
<JobViewAccessControlRules Profile="AgentSynchro"/>
...

Grant end-users permissions to run jobs from the UI

In addition, for end-users to be able to launch a job from the UI, they must be assigned a profile with the following access rights:

/Jobs/RunJob/Launch

This can be done via the JobExecutionAccessControlRules scaffolding.

Example

Conf/Profile AgentSynchro.xml

...

<JobExecutionAccessControlRules Profile="AgentProfileForSynchro"/>
...

Declare usable ClientId/Secret pairs in the configuration

An Agent's Profile is associated with a ClientId/Secret pair used by the Agent to authenticate to the Server.

Usable ClientId/Secret pairs are written to the database from the xml configuration using the <OpenIdClient> xml element.

It is strongly recommended that you write the <OpenIdClient> xml element to a new or existing OpenIdClients.xml file in the configuration root folder.

The ClientId/Secret pair thus created must be associated, via the Profile attribute, with the profile created or updated in the previous step.

Example

The following example creates a ClientId/Secret pair to be used by the Agent to authenticate to the Server and complete Jobs. The secret is hashed with the Usercube-New-OpenIDSecret tool.

Conf/OpenIdClients.xml

...

<OpenIdClient Identifier="Job" HashedSecret="K7gNU3sdo+Op8wNhqoVWhr5v6s1xYv72ol/pe/Unols=" DisplayName_L1="ClientId for Jobs" DisplayName_L2="ClientId pour les jobs" Profile="Administrator" />

...

Set up the Agent to use ClientId/Secret pairs

The ClientId/Secret pairs that the Agent may use are written to the Agent's appsettings.agent technical configuration set.

The ClientId of such a pair can then be used as the value of a Task's OpenIdClient attribute.

Pairs written in the OpenIdClients section may be used by Tasks.

The Job itself uses the DefaultOpenIdClient value.

This example sets the "Job/secret" pair to be used by tasks and jobs:

appsettings.agent.json

{
  ...
  "OpenId": {
    "OpenIdClients": {
      "Job": "secret"
    },
    "DefaultOpenIdClient": "Job"
  }
}
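The way these two settings relate can be sketched as a small consistency check. This is an illustration, not the Agent's code: `resolve_client` is a hypothetical helper, `client_id=None` stands for the Job itself (which uses DefaultOpenIdClient), and any other value stands for a Task's OpenIdClient attribute. The settings string mirrors the example above without its "..." placeholder.

```python
import json
from typing import Optional, Tuple

# The appsettings.agent.json example above, without the "..." placeholder.
APPSETTINGS_AGENT = """
{
  "OpenId": {
    "OpenIdClients": {
      "Job": "secret"
    },
    "DefaultOpenIdClient": "Job"
  }
}
"""


def resolve_client(settings: dict, client_id: Optional[str] = None) -> Tuple[str, str]:
    """Return the (ClientId, secret) pair to authenticate with.

    client_id=None stands for the Job itself, which uses DefaultOpenIdClient;
    otherwise pass the value of a Task's OpenIdClient attribute.
    """
    open_id = settings["OpenId"]
    clients = open_id["OpenIdClients"]
    chosen = open_id["DefaultOpenIdClient"] if client_id is None else client_id
    if chosen not in clients:
        raise KeyError(f"ClientId {chosen!r} is not declared in OpenId.OpenIdClients")
    return chosen, clients[chosen]


if __name__ == "__main__":
    settings = json.loads(APPSETTINGS_AGENT)
    print(resolve_client(settings))  # ('Job', 'secret')
```

Running such a check before deploying catches a DefaultOpenIdClient value that does not match any declared pair.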

Job launch

Scheduling the job execution can rely either on Usercube's scheduler or an external scheduler.

With Usercube's scheduler

Use the Job's CronTabExpression attribute.

This example uses Usercube's scheduler to execute the CyberArk_Synchronize_Complete_Manually job every fifteen minutes:

Conf/SCIMCyberArk/SCIM CyberArk Jobs.xml

<Job Identifier="CyberArk_Synchronize_Complete_Manually" CronTabExpression="*/15 * * * *" DisplayName_L1="01: CyberArk - Synchronization Complete (manually)" DisplayName_L2="00: CyberArk - Synchronisation Complete (manuelle)" Agent="Local"> ...

</Job>

For more details about checking Crontab expressions, see the crontab.guru website.
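In standard five-field crontab syntax, the first field is the minute, and "*/15" means "every 15th minute starting at 0", so the expression "*/15 * * * *" fires at minutes 0, 15, 30 and 45 of every hour. The sketch below expands a minute field to make this concrete. It is a simplified illustration of generic cron semantics, not Usercube's parser, and it supports only "*", "*/step", single values and comma-separated lists.

```python
from typing import List, Set


def expand_minute_field(field: str) -> List[int]:
    """Expand a crontab minute field into the minutes (0-59) it matches."""
    minutes: Set[int] = set()
    for part in field.split(","):
        if part == "*":
            minutes.update(range(60))
        elif part.startswith("*/"):
            step = int(part[2:])
            if step <= 0:
                raise ValueError("step must be a positive integer")
            # Every `step` minutes, starting at minute 0.
            minutes.update(range(0, 60, step))
        else:
            value = int(part)
            if not 0 <= value <= 59:
                raise ValueError(f"minute out of range: {value}")
            minutes.add(value)
    return sorted(minutes)


if __name__ == "__main__":
    print(expand_minute_field("*/15"))  # [0, 15, 30, 45]
```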

With an external scheduler

An external scheduler would rely on the Usercube-Invoke-Job tool.

Example

The following command can be scheduled. It executes the CyberArk_Synchronize_Complete_Manually job using the "Job/secret" authentication pair to connect to the Usercube Server at http://identitymanager.contoso.com.

./identitymanager-Invoke-Job.exe -j "CyberArk_Synchronize_Complete_Manually" --api-secret secret --api-client-id Job --api-url "http://identitymanager.contoso.com"

Validation

Deploy configuration

The configuration is written to the database using the Deploy Configuration tool.

Test

The Synchronization job should be found in the UI, under the Job Execution menu, with the name set in the Job's DisplayName_Li attribute (for example DisplayName_L1).

From there, it can be launched and debugged (if needed).

After execution, the CyberArk SCIM objects should be in the UR_Resources table of the SQL Server database.

Provision Salesforce Users' Profiles via SCIM

This guide shows how to provision a user's account profile in a Salesforce system with the SCIM connector.

Prerequisites

This guide assumes that you already have a working synchronization for Salesforce users' accounts, entitlements, and links between accounts and entitlements.

Context

In Salesforce, both profiles and permission sets belong to the Entitlements entity, but they are not managed the same way: a user account must have exactly one profile, while it can have zero or more permission sets.

This implies that entitlements must be treated differently depending on their type.
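The difference in treatment can be sketched as a split of a user's entitlements into the single profile and the permission sets. This only illustrates the constraint; it is not Usercube's provisioning logic. It assumes each entitlement carries the value of its type, that profiles have the value Profile, and that every other value denotes a permission set. The entitlement names and the PermissionSet value in the usage example are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

PROFILE_TYPE = "Profile"  # type value identifying Salesforce profiles


@dataclass(frozen=True)
class Entitlement:
    identifier: str
    entitlement_type: str  # value of the entitlement's type property


def split_entitlements(
    entitlements: Sequence[Entitlement],
) -> Tuple[Entitlement, List[Entitlement]]:
    """Separate a user's single profile from their permission sets."""
    profiles = [e for e in entitlements if e.entitlement_type == PROFILE_TYPE]
    if len(profiles) != 1:
        # A Salesforce user account must have one profile, and only one.
        raise ValueError(f"expected exactly one profile, found {len(profiles)}")
    # Assumption: every non-Profile entitlement is a permission set (zero or more).
    permission_sets = [e for e in entitlements if e.entitlement_type != PROFILE_TYPE]
    return profiles[0], permission_sets


if __name__ == "__main__":
    wanted = [
        Entitlement("Standard User", "Profile"),
        Entitlement("Report Builder", "PermissionSet"),
    ]
    profile, permission_sets = split_entitlements(wanted)
    print(profile.identifier, [p.identifier for p in permission_sets])
```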

Configuration

To handle this special case, Usercube currently expects a very specific configuration. A generic configuration element is planned, to customize this special treatment and apply it in other cases.

For now, the EntityType representing Salesforce's Entitlements entity type must have an entity property whose identifier is exactly type:

<Property Identifier="type" DisplayName_L1="Type" TargetColumnIndex="13" Type="String" />

This property must also be mapped in the Entitlements entity type mapping:

<Property Identifier="type" ConnectionColumn="type" />

That is all you need to do. At the next synchronization, all the entitlements will have their type, and the special treatment can then be applied to those with the Profile type when provisioning users' entitlements.

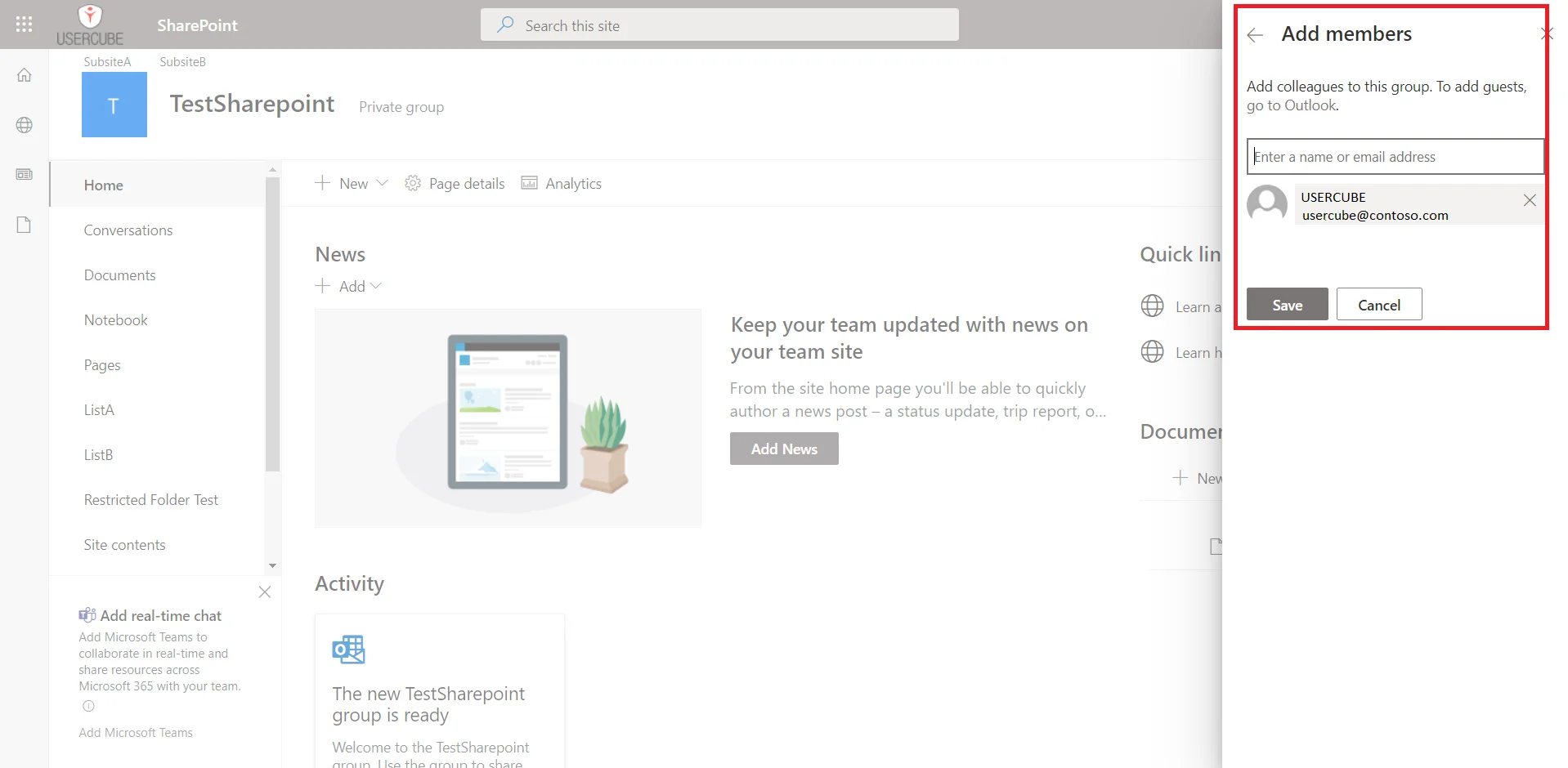

Set up SharePoint's Export and Synchronization

This guide shows how to set up a SharePoint connector to extract data from your SharePoint instance into CSV source files that will be fed to the Synchronization task and to your Usercube resource repository. It will focus on registering Usercube within the target SharePoint, configuring the connector, and building the job to perform a regularly scheduled synchronization.

Prerequisites

External system configuration

This step is designed to grant Usercube a service account to authenticate with the target SharePoint sites. It includes the following substeps: