How to Crawl a Website That Does Not Require Credentials

Question

When trying to scan a website that does not require credentials to access it, the following error appears:

Version: 5.7.0.58

Instance: abandc-test

Component: Collector Service

Level: Error

Page ID: 443213

CollectorEnumerator.CollectPage

Page Collection Exception

Exception: Location: CollectorEnumerator.CollectPage

A Web Exception 'Forbidden occured during processing -> The remote server returned an error: (403) Forbidden.

Details: Error: AccessDeniedError (-403)

Page Id: 443213

conceptCore.PageCollectionException: Location: CollectorEnumerator.CollectPage

A Web Exception 'Forbidden occured during processing ---> System.Net.WebException: The remote server returned an error: (403) Forbidden.

at System.Net.HttpWebRequest.GetResponse()

at conceptHttp.Collection.HttpPageCollector.Get(Boolean forceCollect, Boolean& changedSinceLastCollection)

at conceptEngine.ServiceFramework.Collection.CollectorEnumerator._()

--- End of inner exception stack trace ---

Inner Exception: System.Net.WebException: The remote server returned an error: (403) Forbidden.

at System.Net.HttpWebRequest.GetResponse()

at conceptHttp.Collection.HttpPageCollector.Get(Boolean forceCollect, Boolean& changedSinceLastCollection)

at conceptEngine.ServiceFramework.Collection.CollectorEnumerator._()

How can this error be resolved when the site does not require any credentials?

Answer

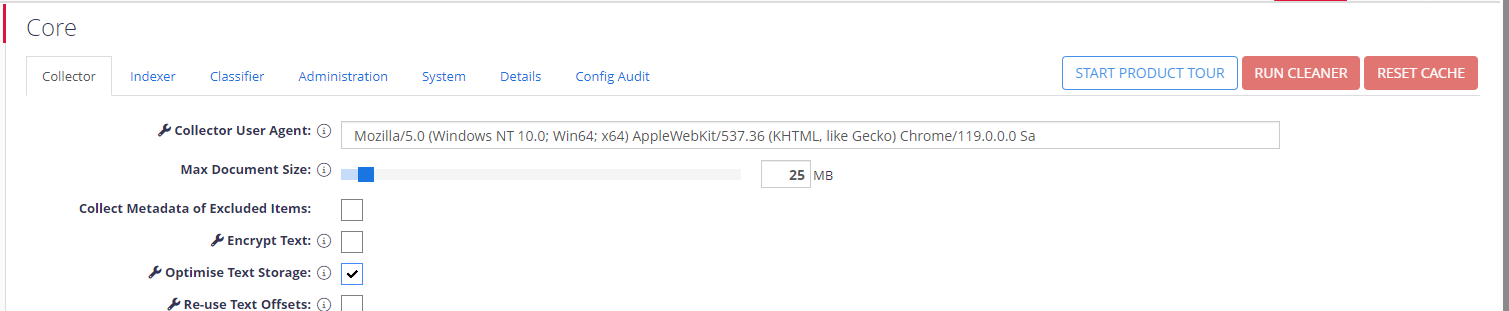

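This error is resolved by entering a browser-like User-Agent string in the Collector User Agent field. Although the site needs no credentials, it most likely rejects requests carrying the collector's default User-Agent header and answers them with 403 Forbidden. To set the value:

- In Netwrix Data Classification, navigate to Config -> Collector -> Advanced.

- Enter the following string in the Collector User Agent field: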

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/119.0.0.0 Sa
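The following minimal Python sketch shows why changing the User-Agent can turn a 403 into a successful response. It assumes, without confirmation from the log, that the target site filters requests on the User-Agent header. The local server only imitates such a site. `UserAgentFilterHandler`, `fetch_status`, and `run_demo` are hypothetical names. The shortened Mozilla string is a placeholder, not the full value to enter in Netwrix Data Classification.

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class UserAgentFilterHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for a site that rejects non-browser clients.

    Assumption: the real site filters on the User-Agent header.
    """

    def do_GET(self):
        user_agent = self.headers.get("User-Agent", "")
        # Accept only requests whose User-Agent looks like a browser's.
        status = 200 if user_agent.startswith("Mozilla/") else 403
        self.send_response(status)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def fetch_status(url, user_agent=None):
    """Return the HTTP status code of a GET request to url.

    With user_agent=None, urllib sends its default "Python-urllib/x.y"
    header, which plays the role of a non-browser crawler here.
    """
    headers = {} if user_agent is None else {"User-Agent": user_agent}
    request = urllib.request.Request(url, headers=headers)
    # Empty ProxyHandler so a localhost request never goes through a system proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(request, timeout=5) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx/5xx responses are raised as HTTPError


def run_demo():
    # Port 0 lets the OS pick a free port for the local test server.
    server = HTTPServer(("127.0.0.1", 0), UserAgentFilterHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    url = f"http://127.0.0.1:{server.server_address[1]}/"
    try:
        # Placeholder browser-like value (shortened, hypothetical).
        browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
        return fetch_status(url), fetch_status(url, browser_ua)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    default_status, browser_status = run_demo()
    print(f"default User-Agent: {default_status}")
    print(f"browser-like User-Agent: {browser_status}")
```

Against the imitation server, the default urllib User-Agent gets 403 and the browser-like one gets 200. To check whether a real site behaves the same way, `fetch_status` can be called with that site's URL, once without and once with a browser-like string. A 403 followed by a 200 suggests that User-Agent filtering is the cause.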